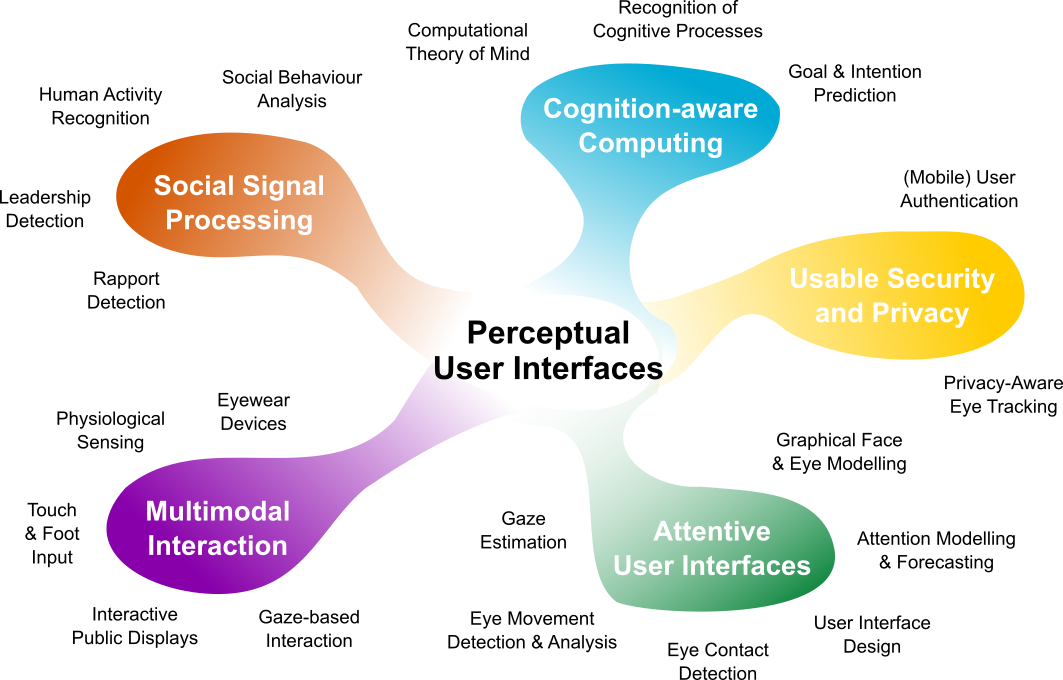

Our goal is to develop next-generation human-machine interfaces that offer human-like interactive capabilities. To this end, we research fundamental computational methods as well as ambient and on-body systems to sense, model, and analyse everyday non-verbal human behaviour and cognition.

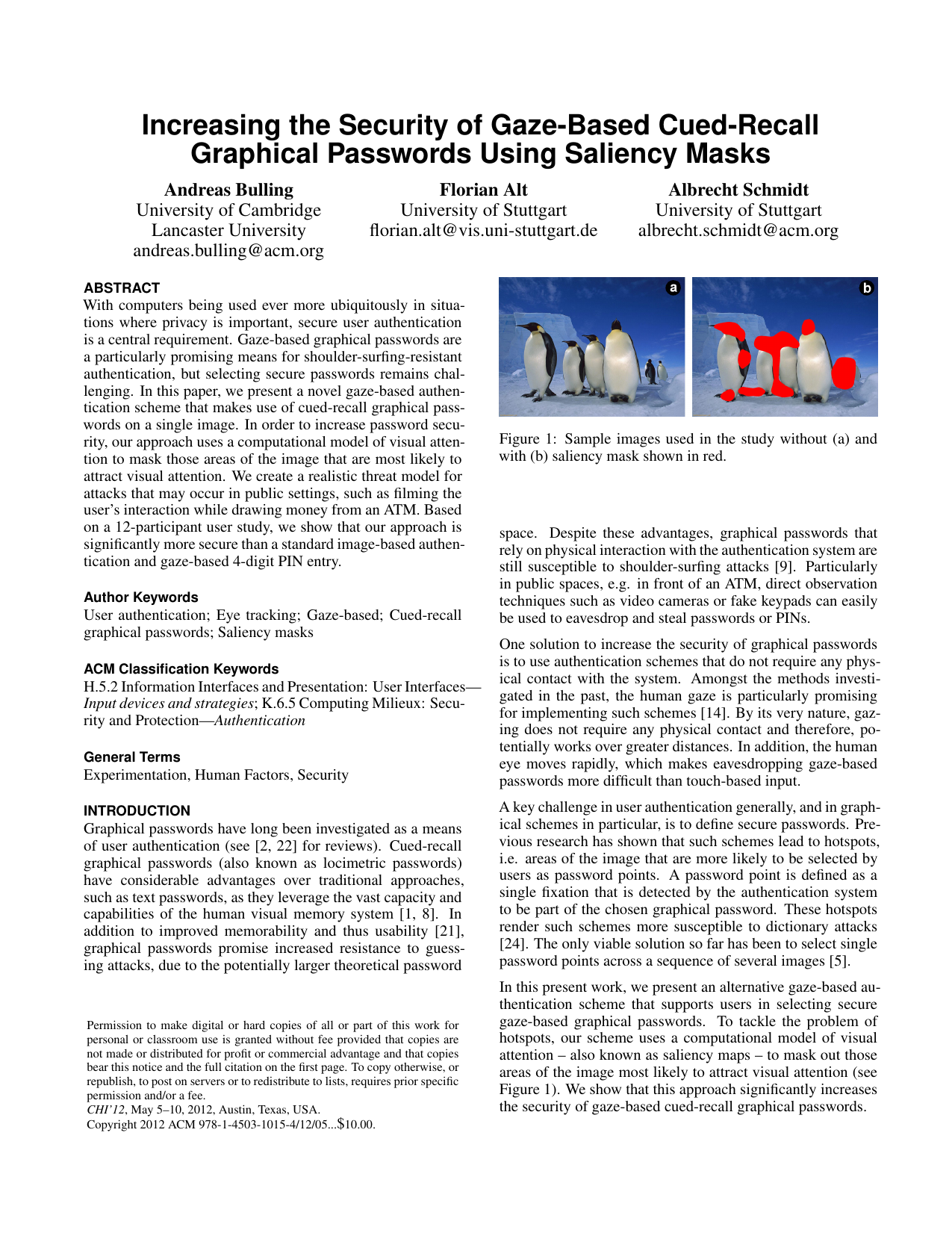

Our research towards this goal spans five main areas: Cognition-aware Computing, Social Signal Processing, Multimodal Interaction, Attentive User Interfaces, and Usable Security and Privacy.

Please find selected key publications for each area below.

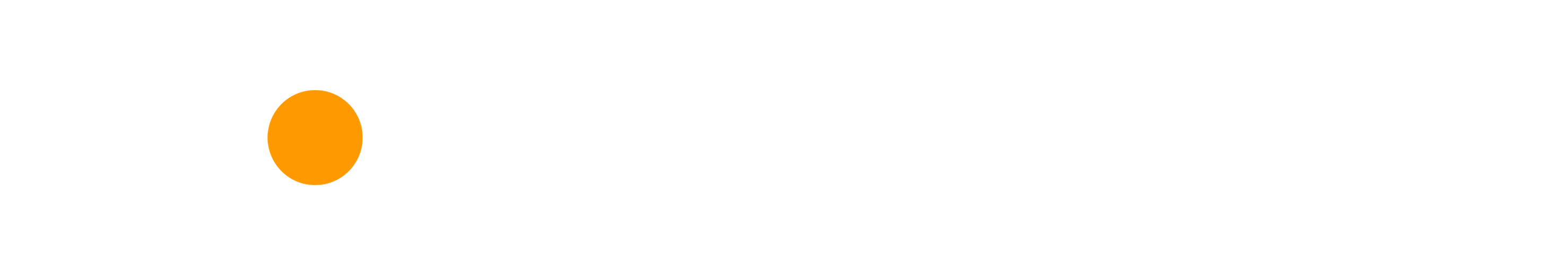

Cognition-aware Computing

-

Neural Reasoning About Agents’ Goals, Preferences, and Actions

Proc. 38th AAAI Conference on Artificial Intelligence (AAAI), pp. 1–13, 2024.

-

Usable and Fast Interactive Mental Face Reconstruction

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 1–15, 2023.

-

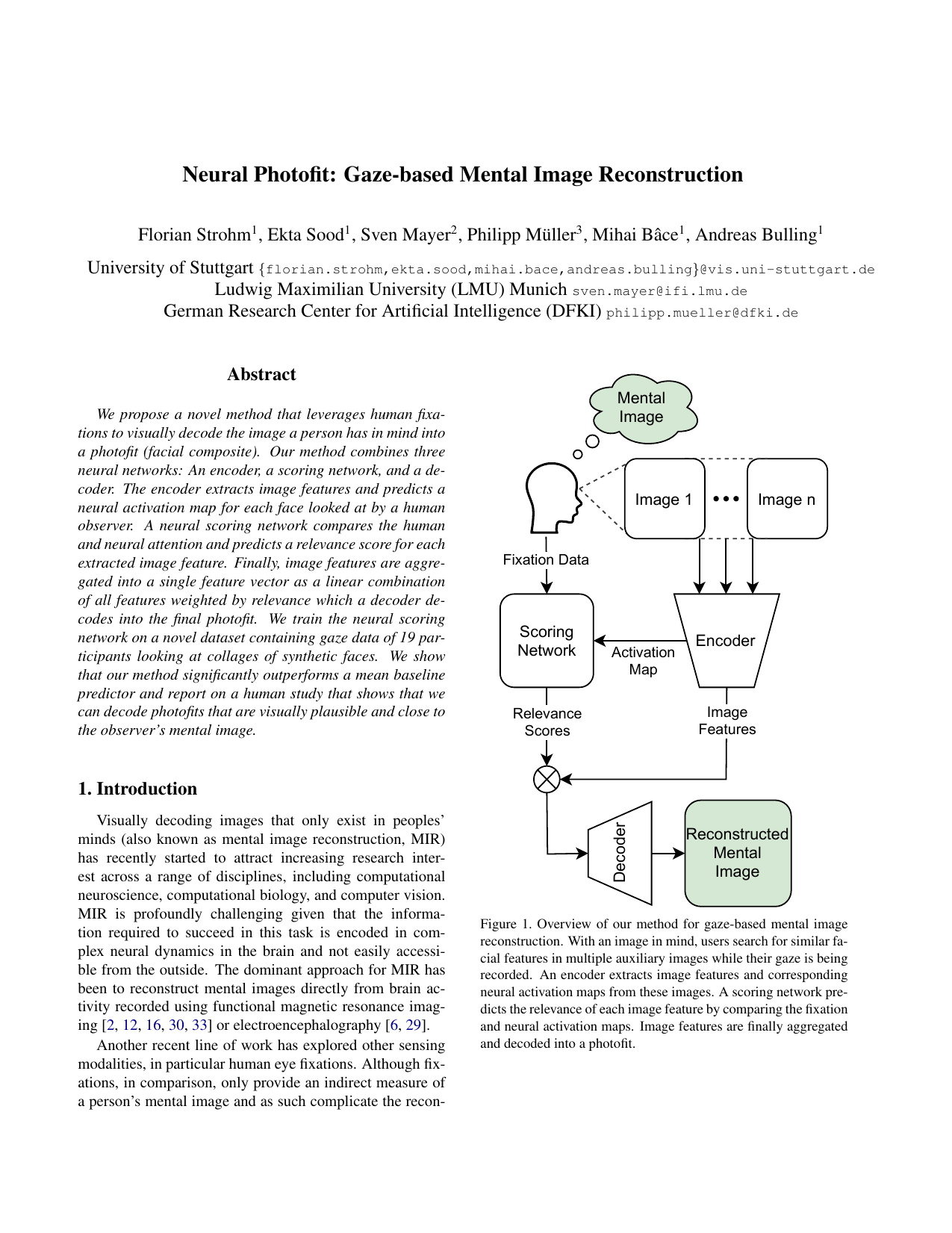

Neural Photofit: Gaze-based Mental Image Reconstruction

Proc. IEEE International Conference on Computer Vision (ICCV), pp. 245-254, 2021.

-

Deep Gaze Pooling: Inferring and Visually Decoding Search Intents From Human Gaze Fixations

Neurocomputing, 387, pp. 369–382, 2020.

-

Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention

Advances in Neural Information Processing Systems (NeurIPS), pp. 1–15, 2020.

-

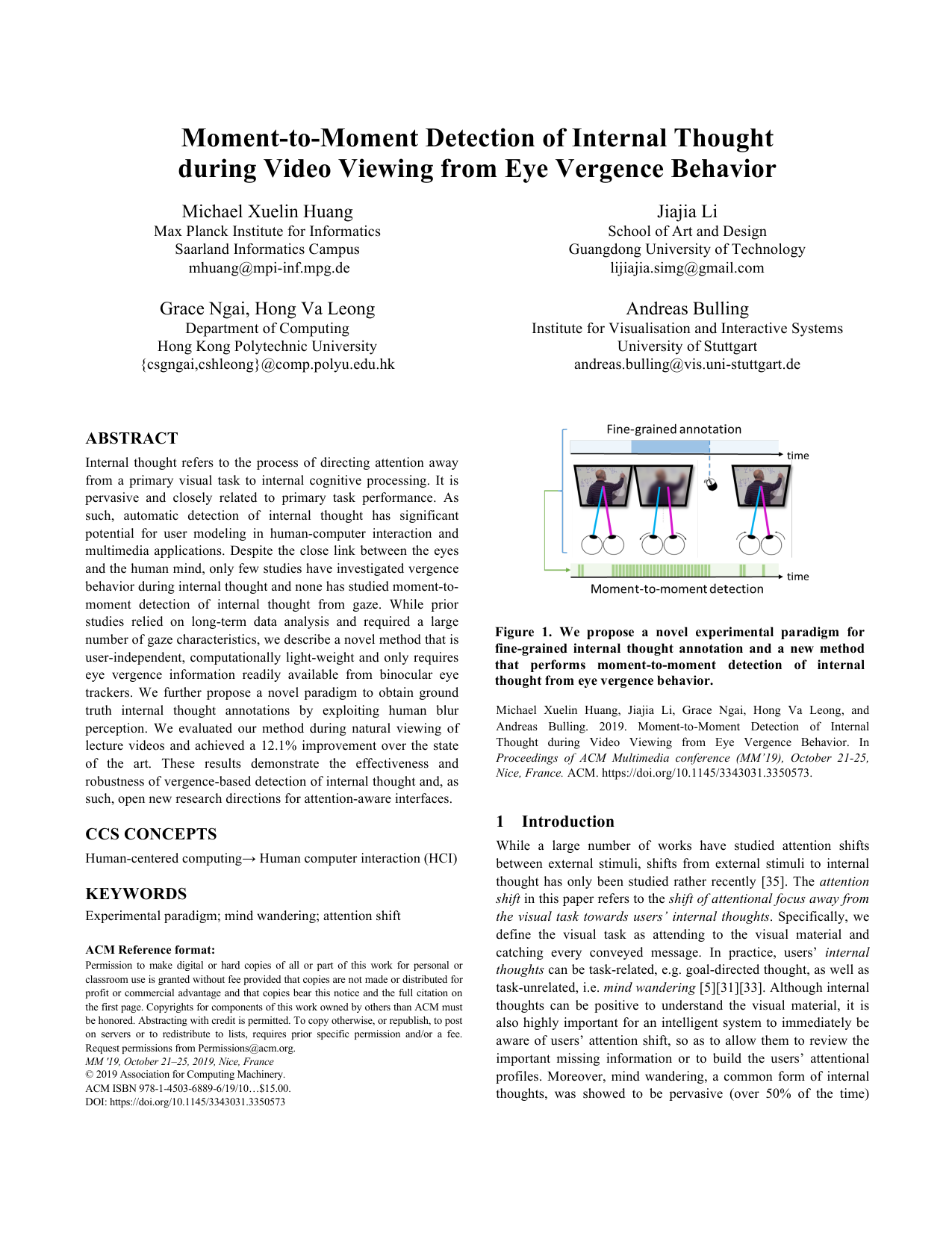

Moment-to-Moment Detection of Internal Thought during Video Viewing from Eye Vergence Behavior

Proc. ACM Multimedia (MM), pp. 1–9, 2019.

-

Eye movements during everyday behavior predict personality traits

Frontiers in Human Neuroscience, 12, pp. 1–8, 2018.

-

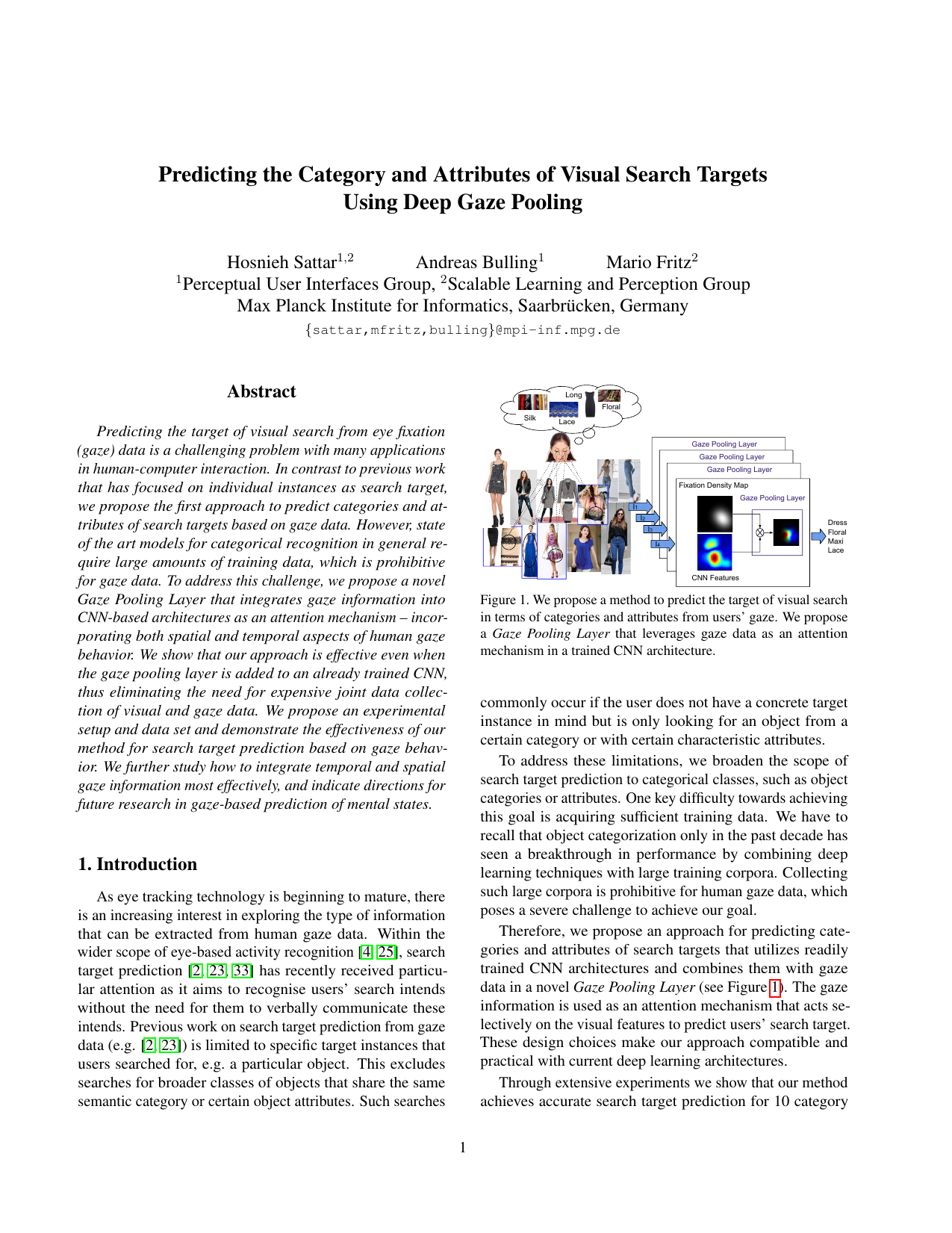

Predicting the Category and Attributes of Visual Search Targets Using Deep Gaze Pooling

Proc. IEEE International Conference on Computer Vision Workshops (ICCVW), pp. 2740-2748, 2017.

-

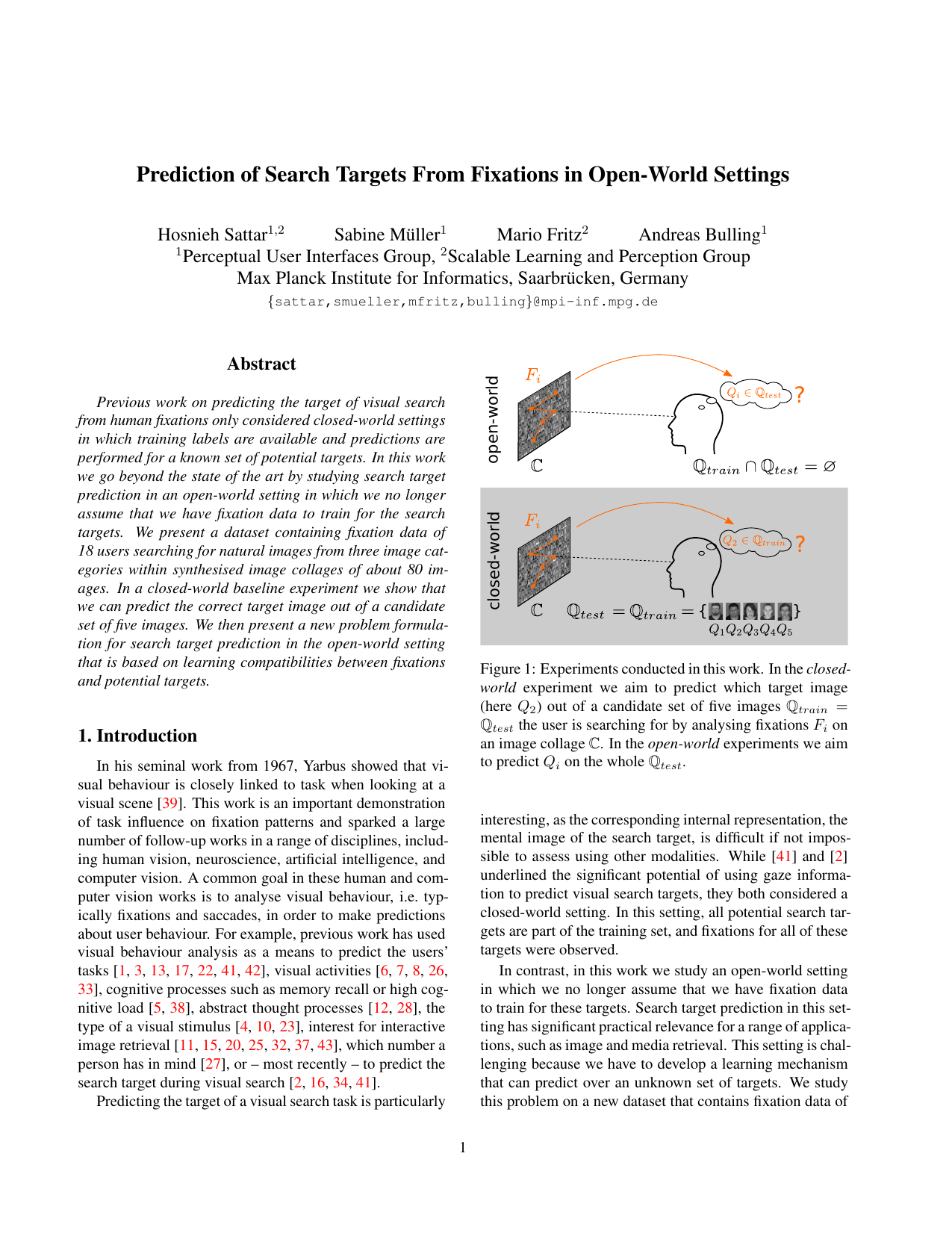

Prediction of Search Targets From Fixations in Open-world Settings

Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 981-990, 2015.

Social Signal Processing

-

ConAn: A Usable Tool for Multimodal Conversation Analysis

Proc. ACM International Conference on Multimodal Interaction (ICMI), pp. 341-351, 2021.

-

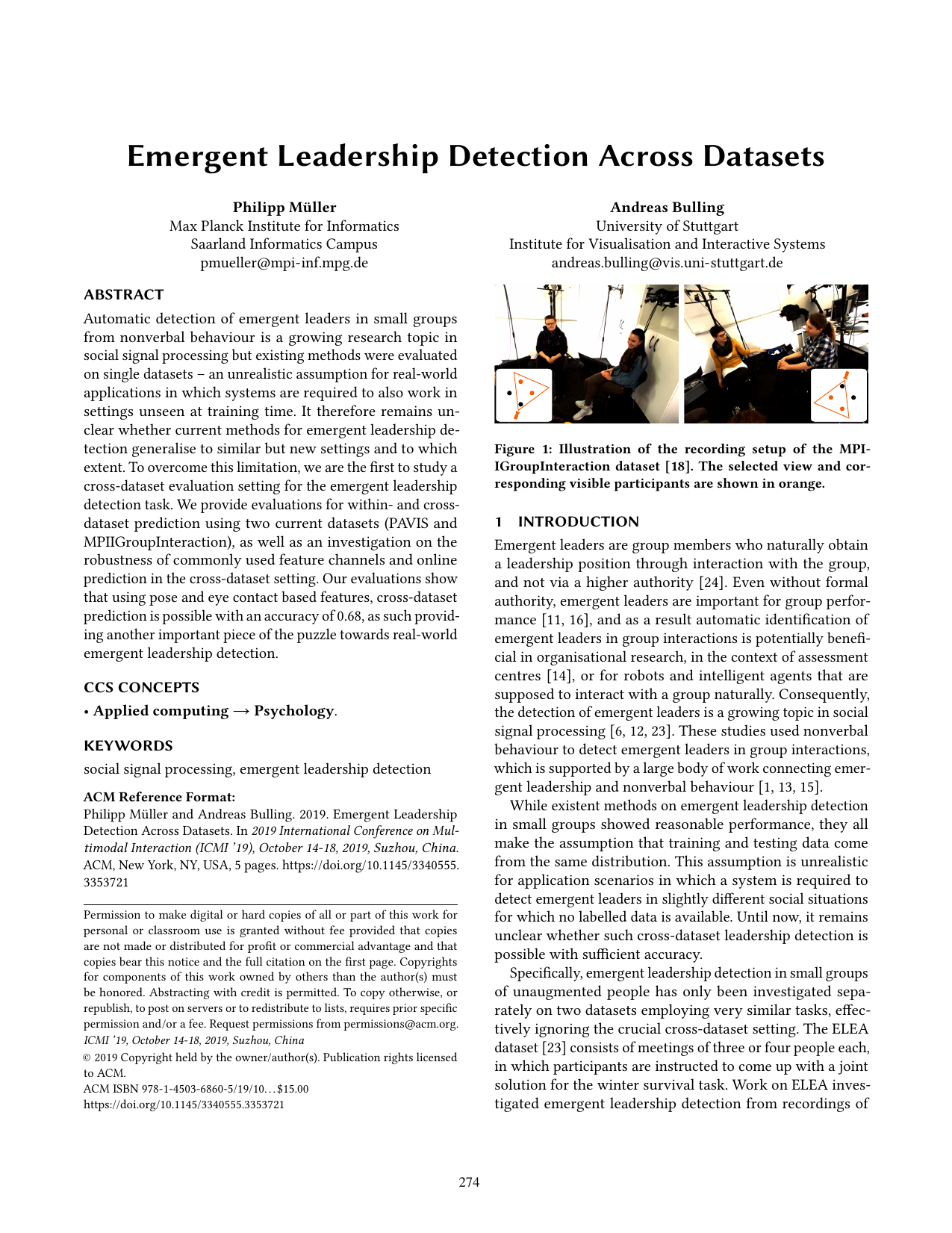

Emergent Leadership Detection Across Datasets

Proc. ACM International Conference on Multimodal Interaction (ICMI), pp. 274-278, 2019.

-

Detecting Low Rapport During Natural Interactions in Small Groups from Non-Verbal Behavior

Proc. ACM International Conference on Intelligent User Interfaces (IUI), pp. 153-164, 2018.

-

Robust Eye Contact Detection in Natural Multi-Person Interactions Using Gaze and Speaking Behaviour

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2018.

-

Discovery of Everyday Human Activities From Long-term Visual Behaviour Using Topic Models

Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 75-85, 2015.

-

Emotion recognition from embedded bodily expressions and speech during dyadic interactions

Proc. International Conference on Affective Computing and Intelligent Interaction (ACII), pp. 663-669, 2015.

-

The Royal Corgi: Exploring Social Gaze Interaction for Immersive Gameplay

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 115-124, 2015.

-

A Tutorial on Human Activity Recognition Using Body-worn Inertial Sensors

ACM Computing Surveys, 46(3), pp. 1–33, 2014.

-

EyeContext: Recognition of High-level Contextual Cues from Human Visual Behaviour

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 305-308, 2013.

-

AutoBAP: Automatic Coding of Body Action and Posture Units from Wearable Sensors

Proc. Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII), pp. 135-140, 2013.

-

MotionMA: Motion Modelling and Analysis by Demonstration

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1309-1318, 2013.

-

Multimodal Recognition of Reading Activity in Transit Using Body-Worn Sensors

ACM Transactions on Applied Perception (TAP), 9(1), pp. 1–21, 2012.

-

Eye Movement Analysis for Activity Recognition Using Electrooculography

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 33(4), pp. 741-753, 2011.

Multimodal Interaction

-

Mouse2Vec: Learning Reusable Semantic Representations of Mouse Behaviour

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–17, 2024.

-

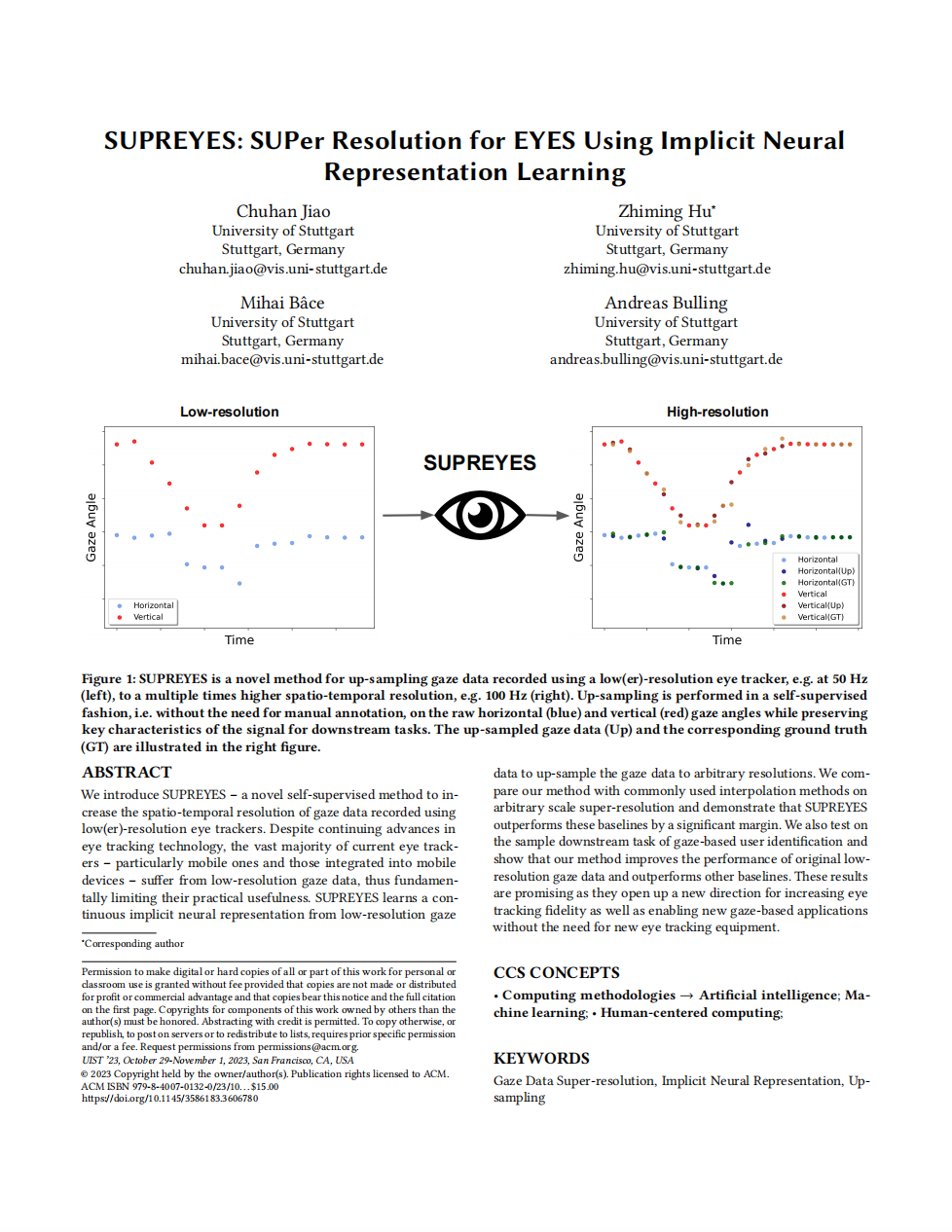

SUPREYES: SUPer Resolution for EYES Using Implicit Neural Representation Learning

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 1–13, 2023.

-

Understanding, Addressing, and Analysing Digital Eye Strain in Virtual Reality Head-Mounted Displays

ACM Transactions on Computer-Human Interaction (TOCHI), 29(4), pp. 1-80, 2022.

-

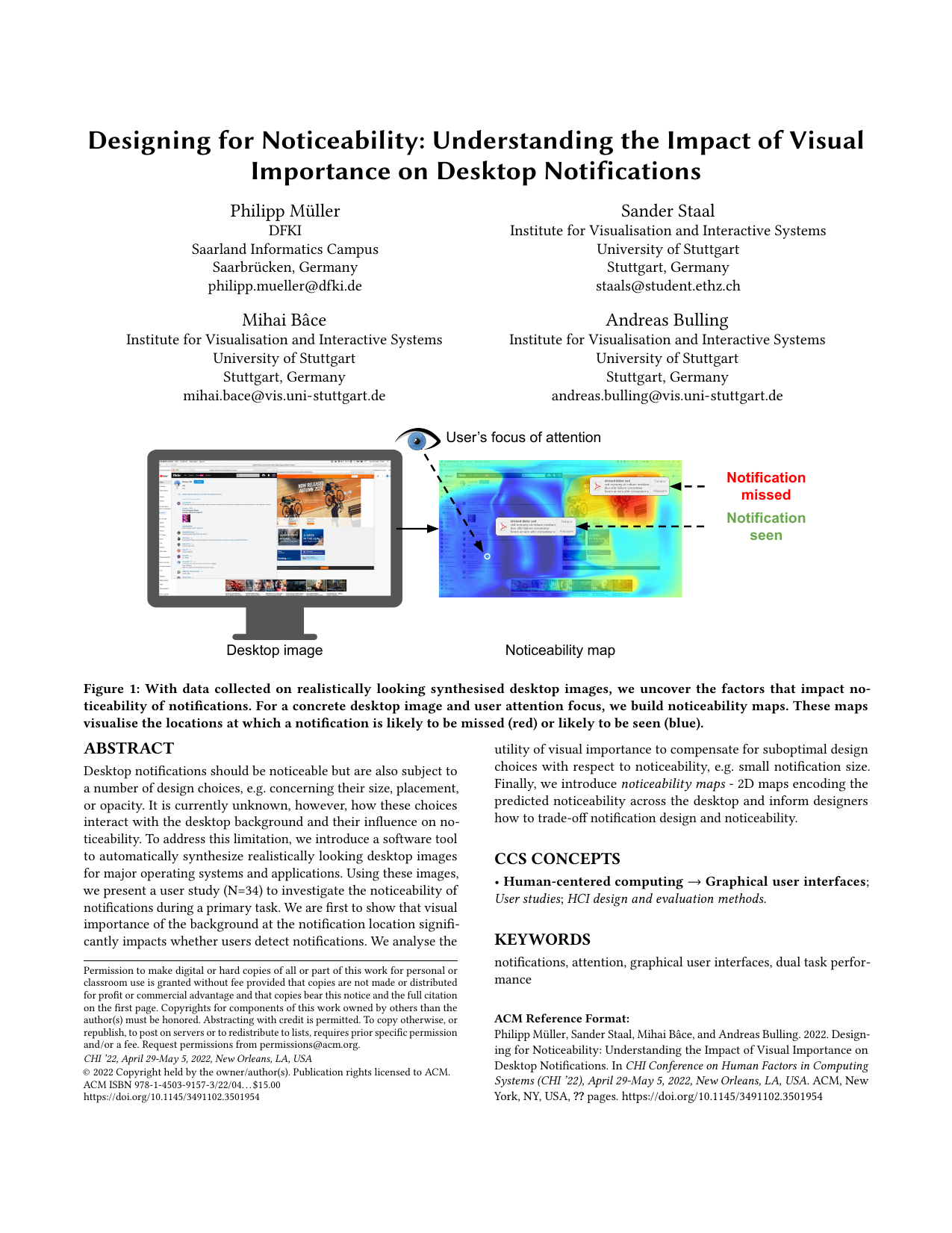

Designing for Noticeability: The Impact of Visual Importance on Desktop Notifications

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–13, 2022.

-

A Critical Assessment of the Use of SSQ as a Measure of General Discomfort in VR Head-Mounted Displays

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2021.

-

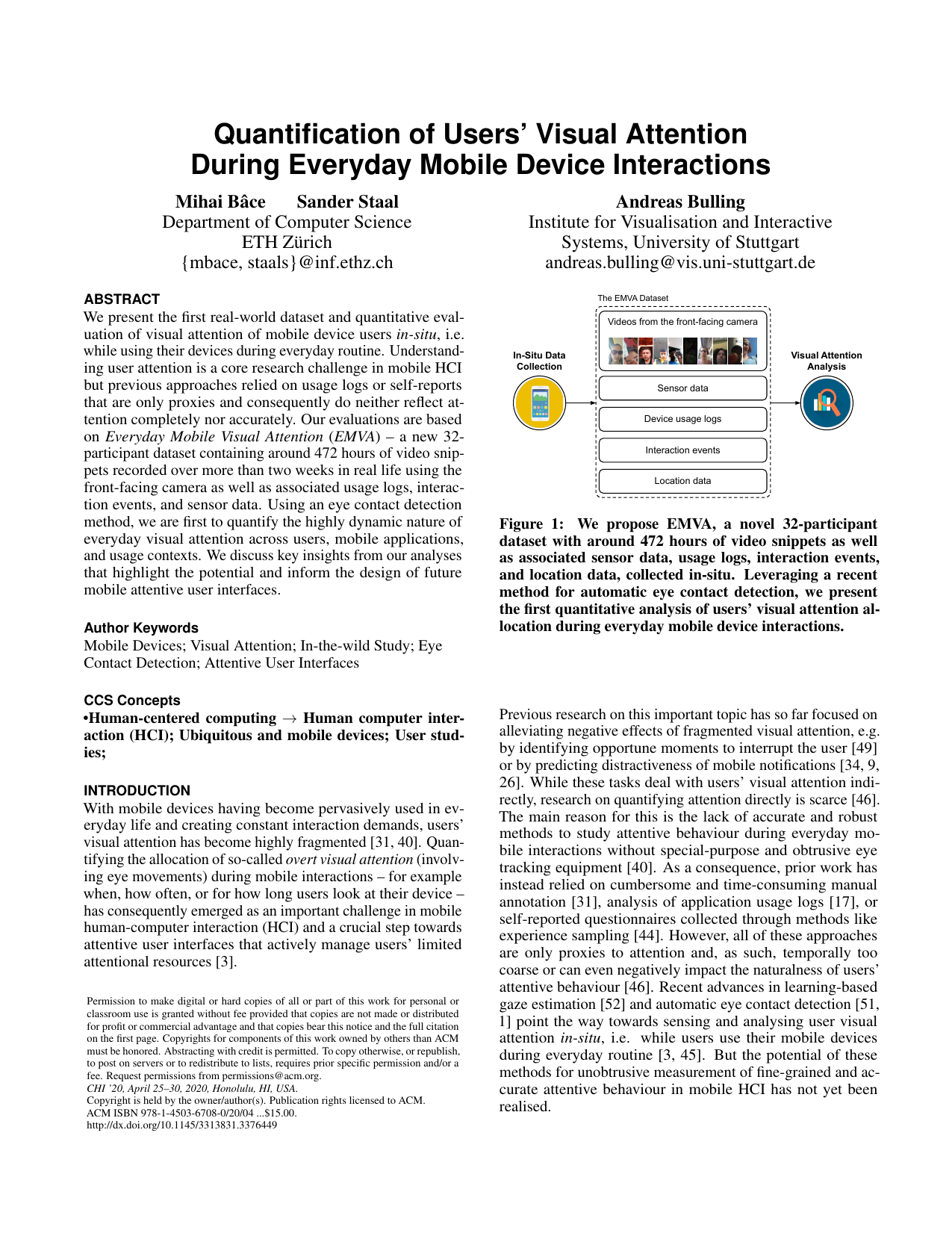

Quantification of Users’ Visual Attention During Everyday Mobile Device Interactions

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2020.

-

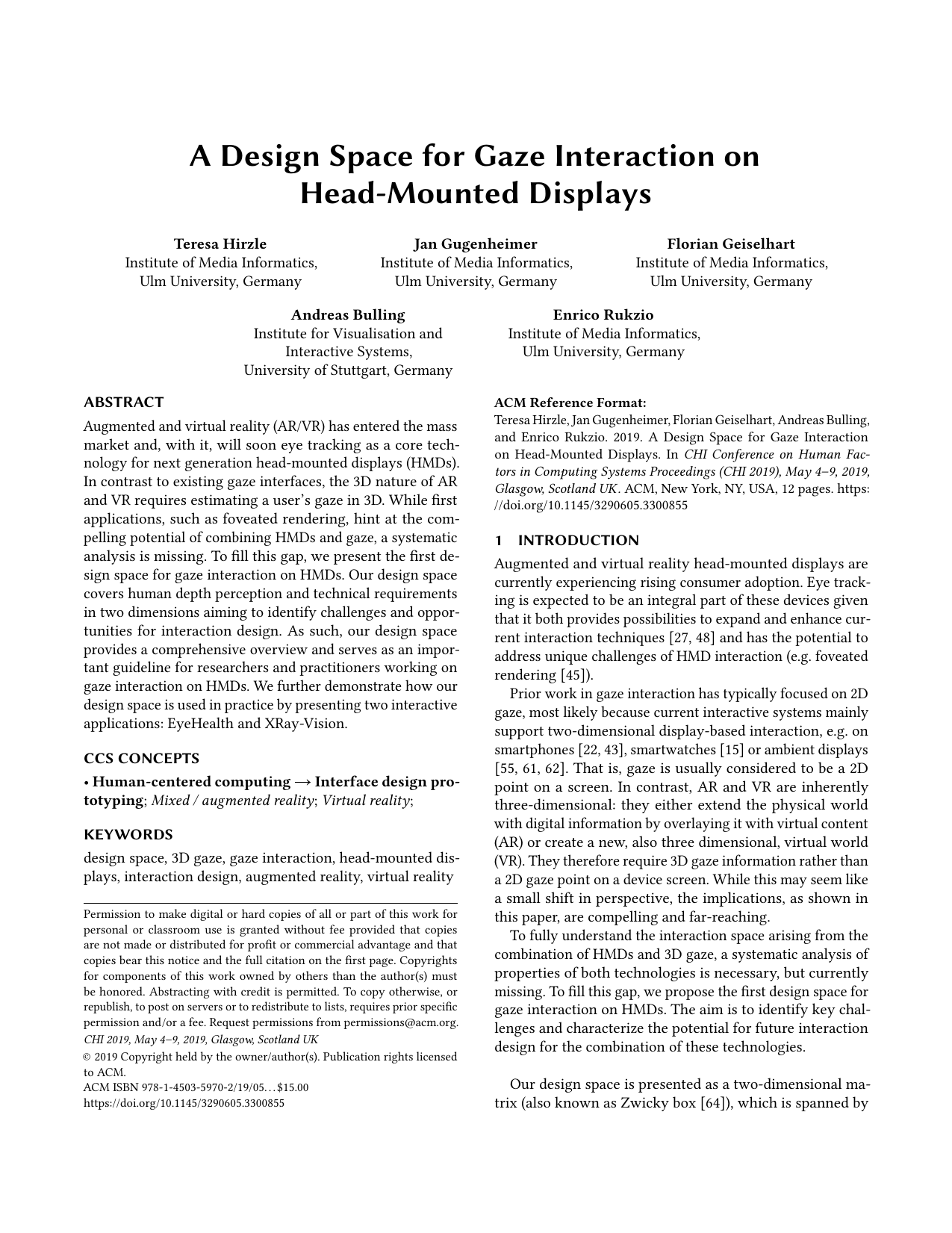

A Design Space for Gaze Interaction on Head-mounted Displays

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–12, 2019.

-

Which one is me? Identifying Oneself on Public Displays

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–12, 2018.

-

The Past, Present, and Future of Gaze-enabled Handheld Mobile Devices: Survey and Lessons Learned

Proc. ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI), pp. 1–17, 2018.

-

Look together: using gaze for assisting co-located collaborative search

Personal and Ubiquitous Computing, 21(1), pp. 173-186, 2017.

-

EyePACT: Eye-Based Parallax Correction on Touch-Enabled Interactive Displays

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 1(4), pp. 1–18, 2017.

-

InvisibleEye: Mobile Eye Tracking Using Multiple Low-Resolution Cameras and Learning-Based Gaze Estimation

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 1(3), pp. 1–21, 2017.

-

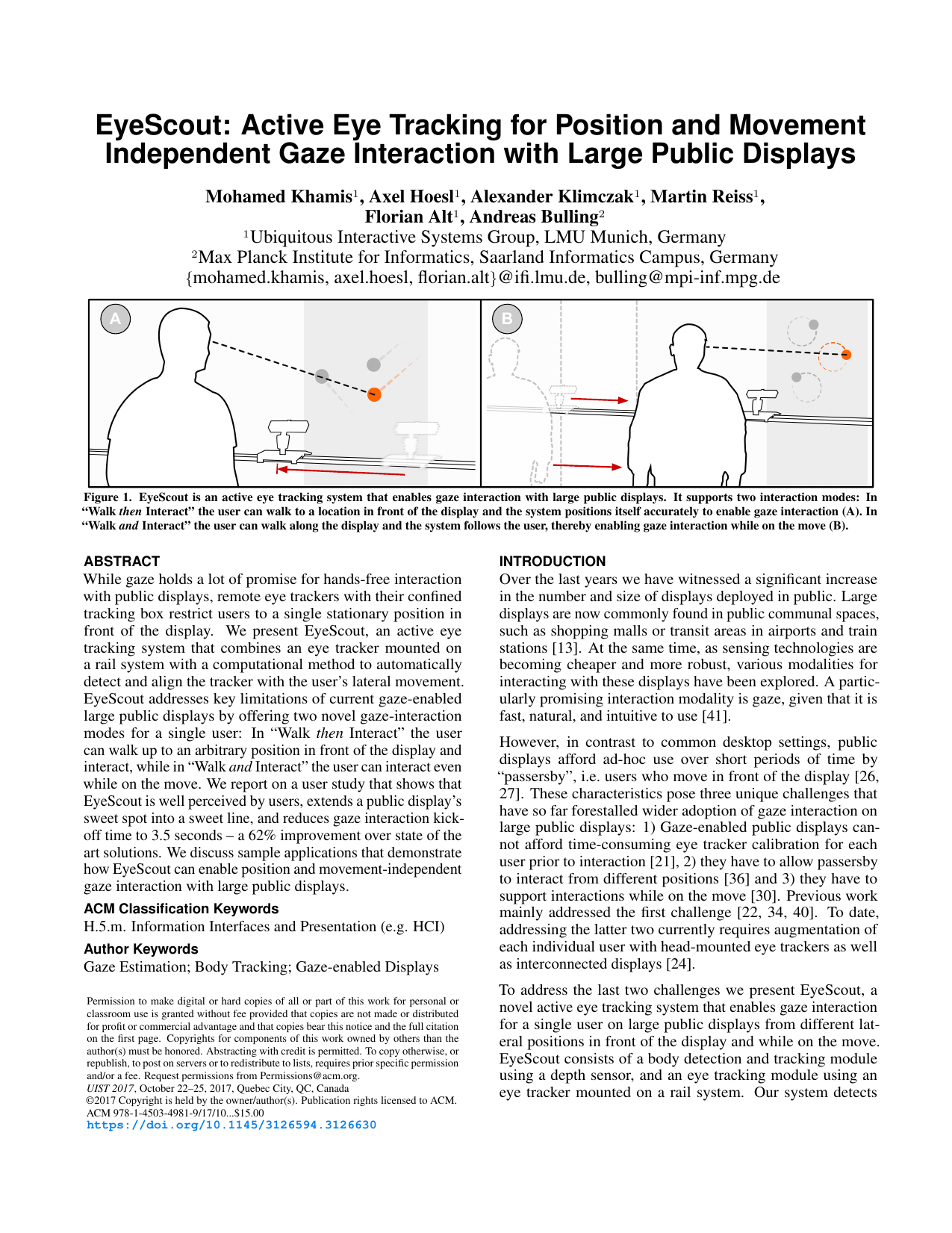

EyeScout: Active Eye Tracking for Position and Movement Independent Gaze Interaction with Large Public Displays

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 155-166, 2017.

-

TextPursuits: Using Text for Pursuits-Based Interaction and Calibration on Public Displays

Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 274-285, 2016.

-

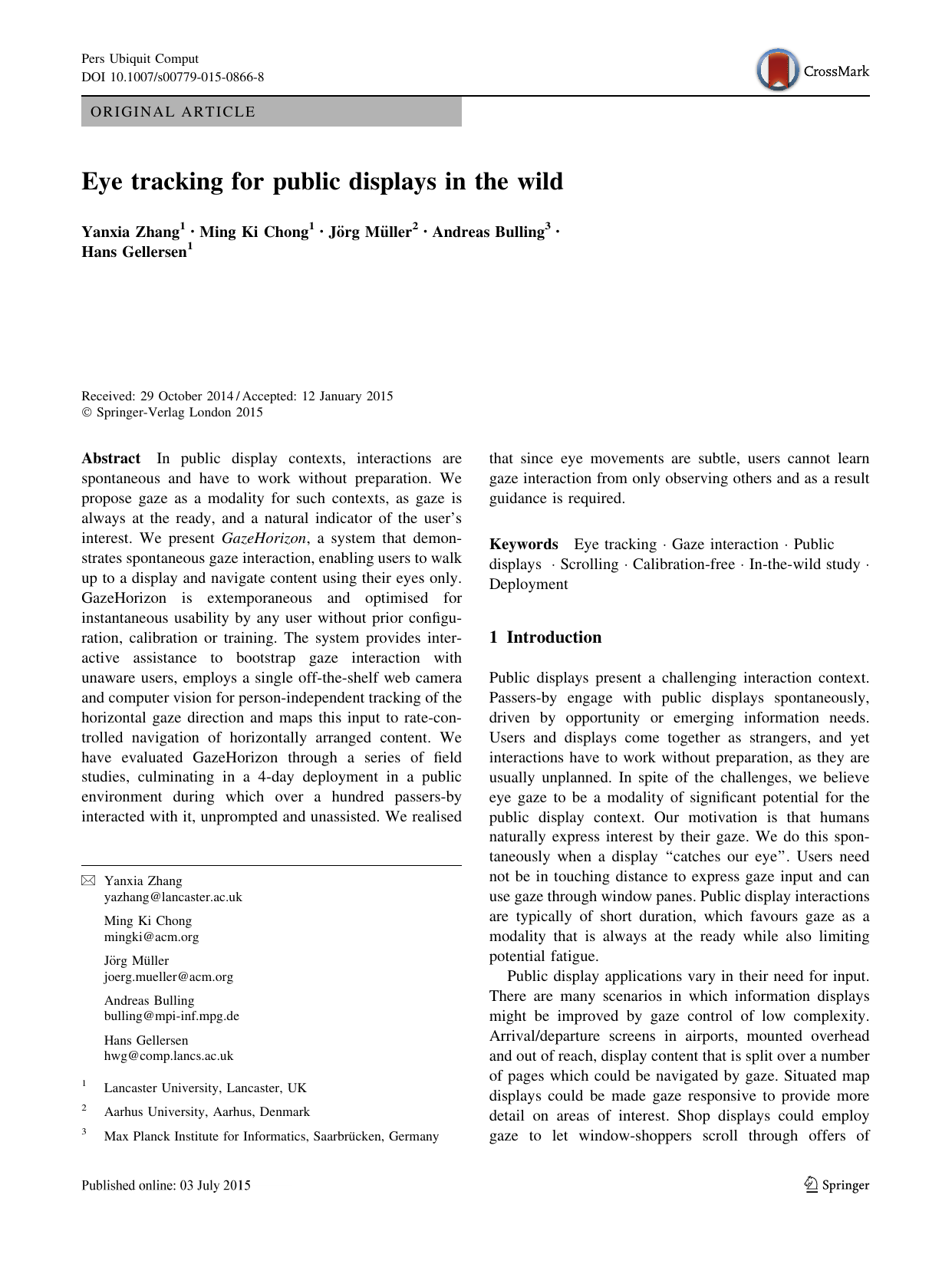

Eye tracking for public displays in the wild

Springer Personal and Ubiquitous Computing, 19(5), pp. 967-981, 2015.

-

Orbits: Enabling Gaze Interaction in Smart Watches using Moving Targets

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 457-466, 2015.

-

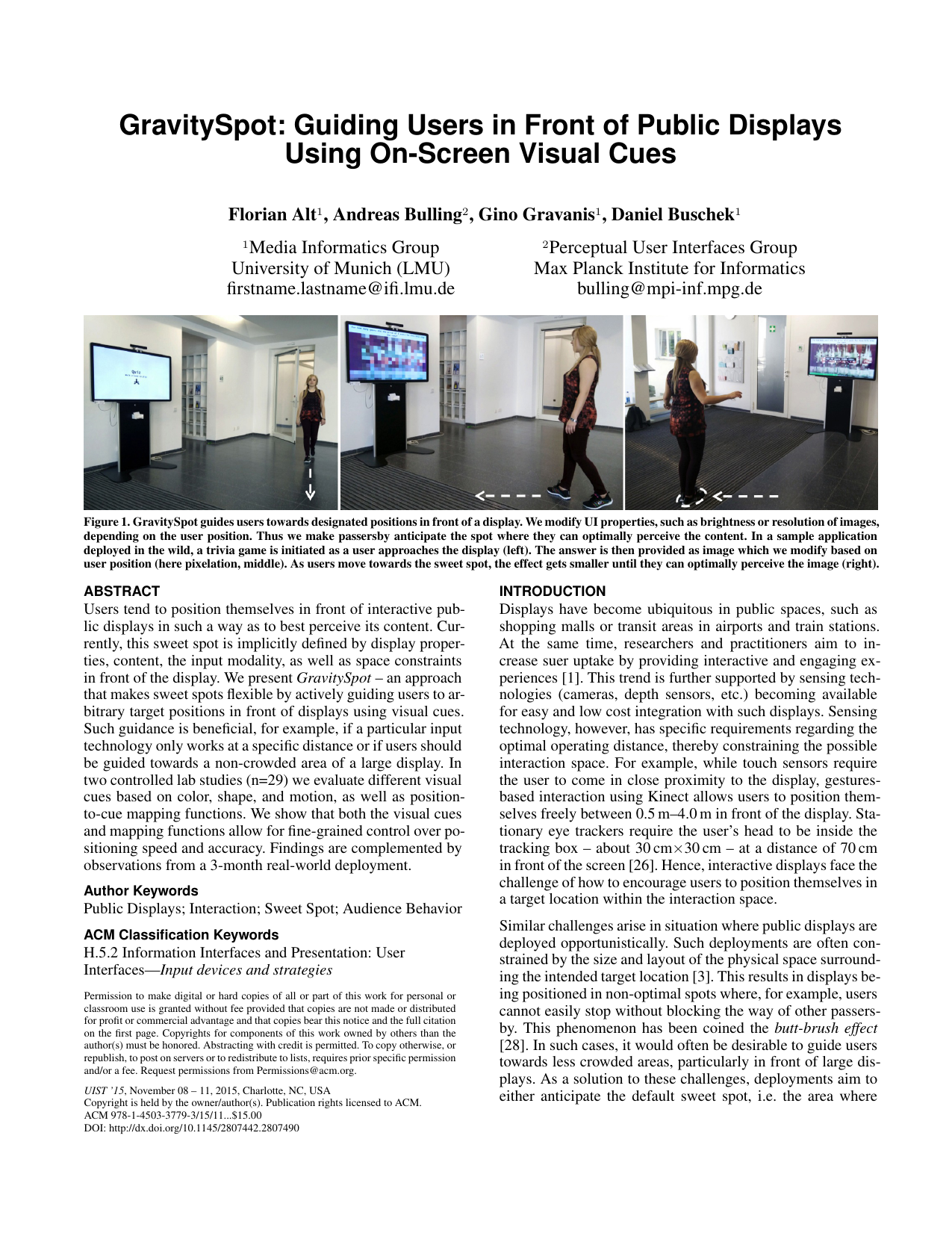

GravitySpot: Guiding Users in Front of Public Displays Using On-Screen Visual Cues

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 47-56, 2015.

-

Gaze+RST: Integrating Gaze and Multitouch for Remote Rotate-Scale-Translate Tasks

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 4179-4188, 2015.

-

Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction

Adj. Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 1151-1160, 2014.

-

GazeHorizon: Enabling Passers-by to Interact with Public Displays by Gaze

Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 559-563, 2014.

-

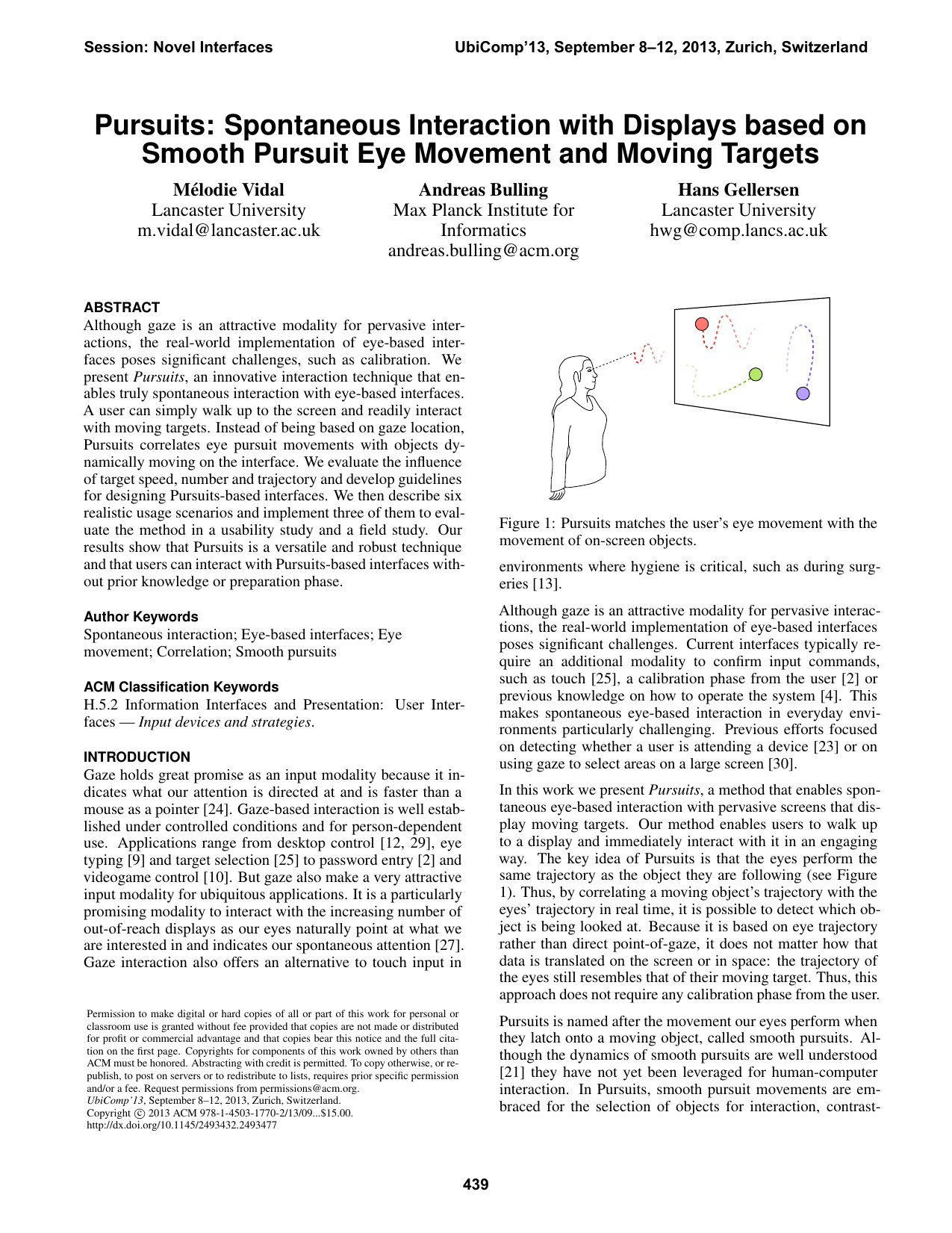

Pursuits: Spontaneous Interaction with Displays based on Smooth Pursuit Eye Movement and Moving Targets

Proc. ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), pp. 439-448, 2013.

-

Pursuit Calibration: Making Gaze Calibration Less Tedious and More Flexible

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 261-270, 2013.

-

SideWays: A Gaze Interface for Spontaneous Interaction with Situated Displays

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 851-860, 2013.

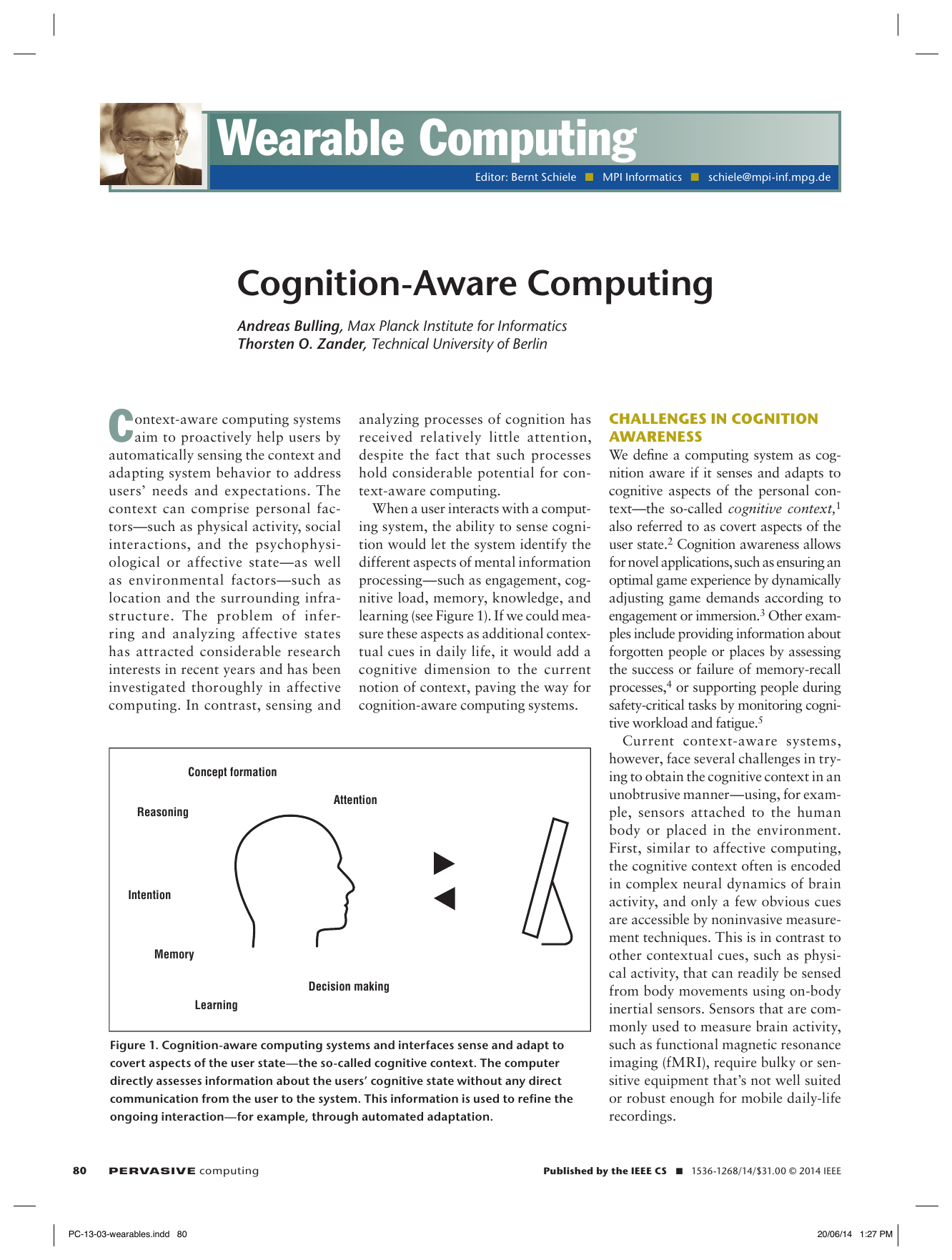

Attentive User Interfaces

-

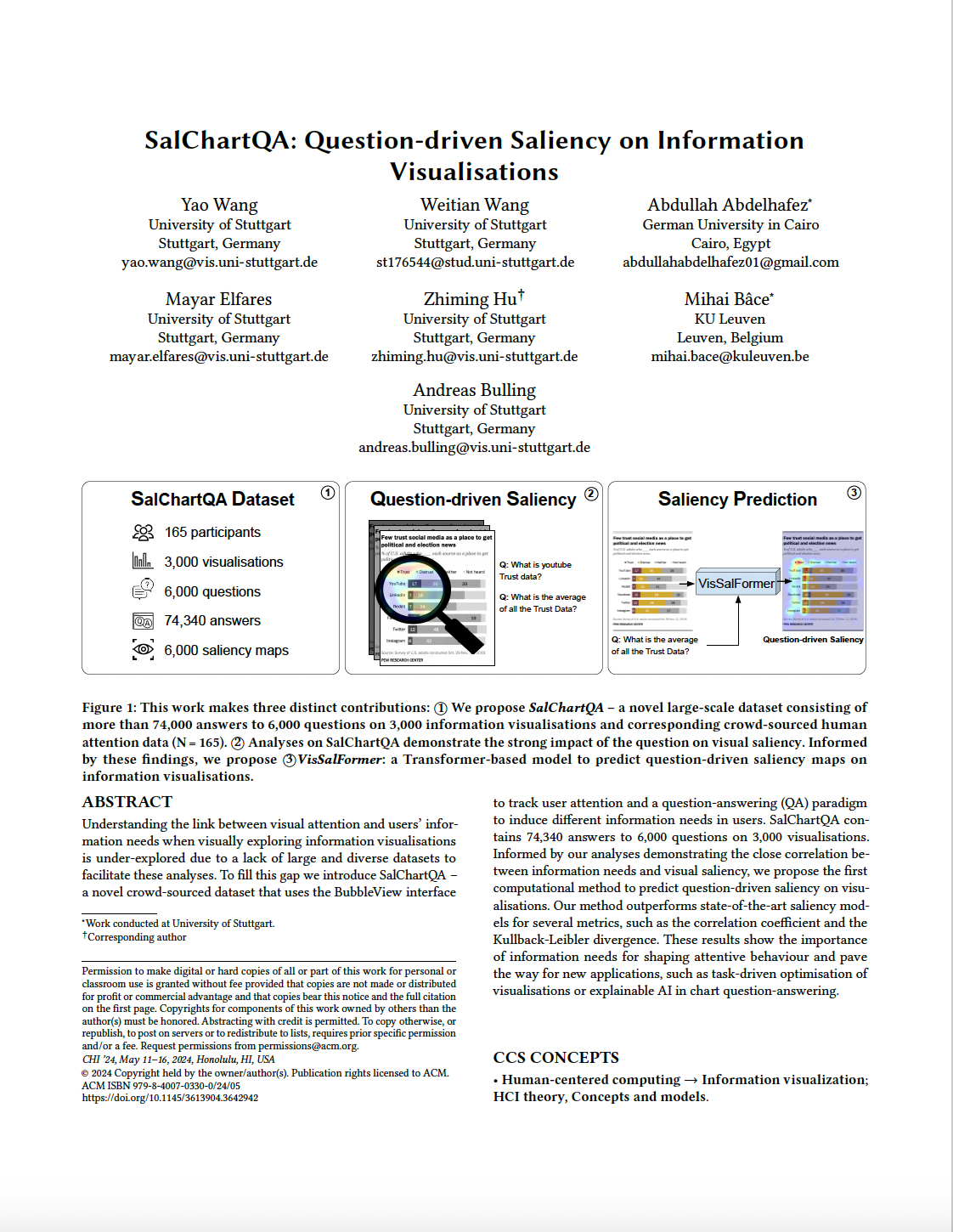

SalChartQA: Question-driven Saliency on Information Visualisations

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2024.

-

Scanpath Prediction on Information Visualisations

IEEE Transactions on Visualization and Computer Graphics (TVCG), (), pp. 1–15, 2023.

-

Improving Neural Saliency Prediction with a Cognitive Model of Human Visual Attention

Proc. the 45th Annual Meeting of the Cognitive Science Society (CogSci), pp. 3639–3646, 2023.

-

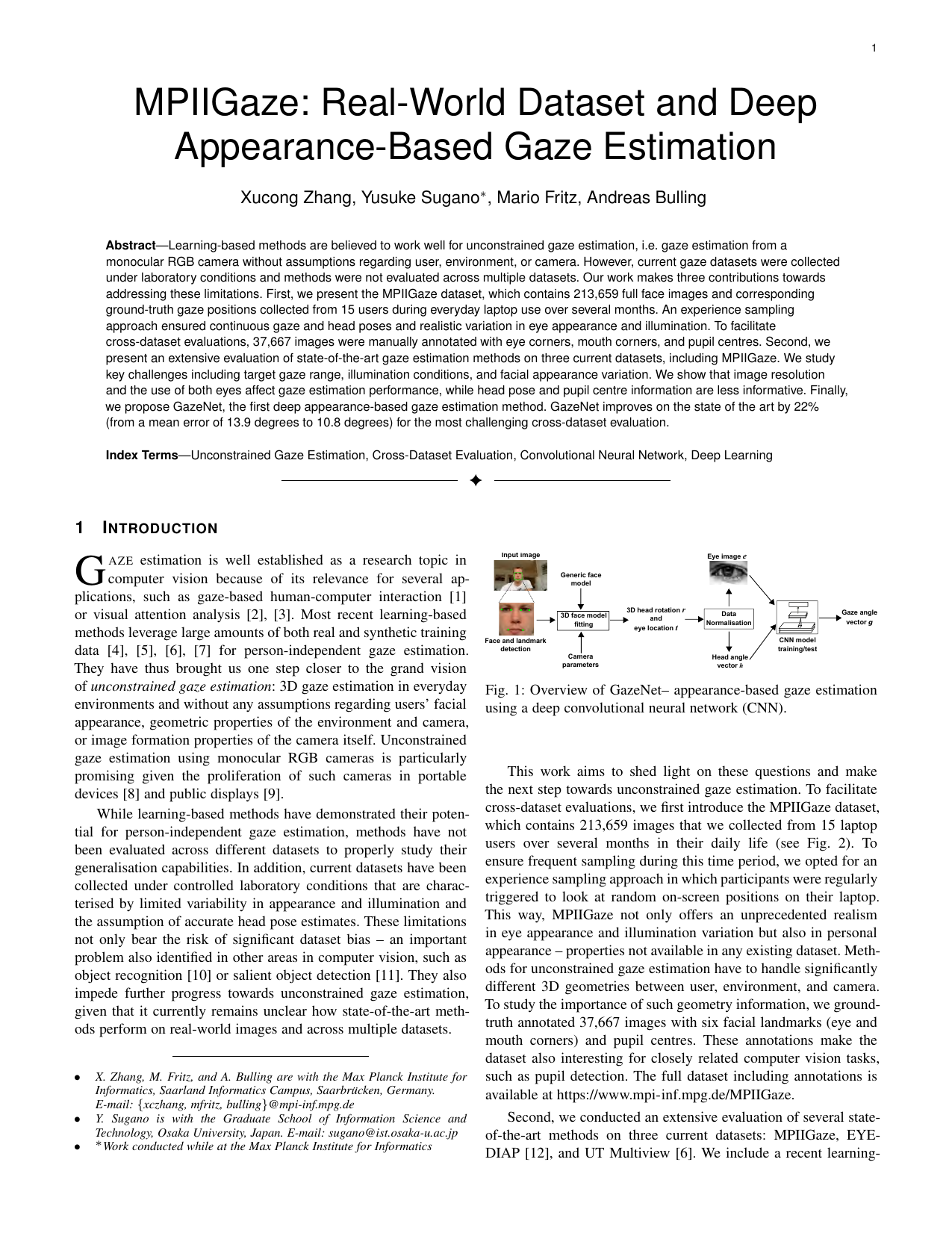

MPIIGaze: Real-World Dataset and Deep Appearance-Based Gaze Estimation

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 41(1), pp. 162-175, 2019.

-

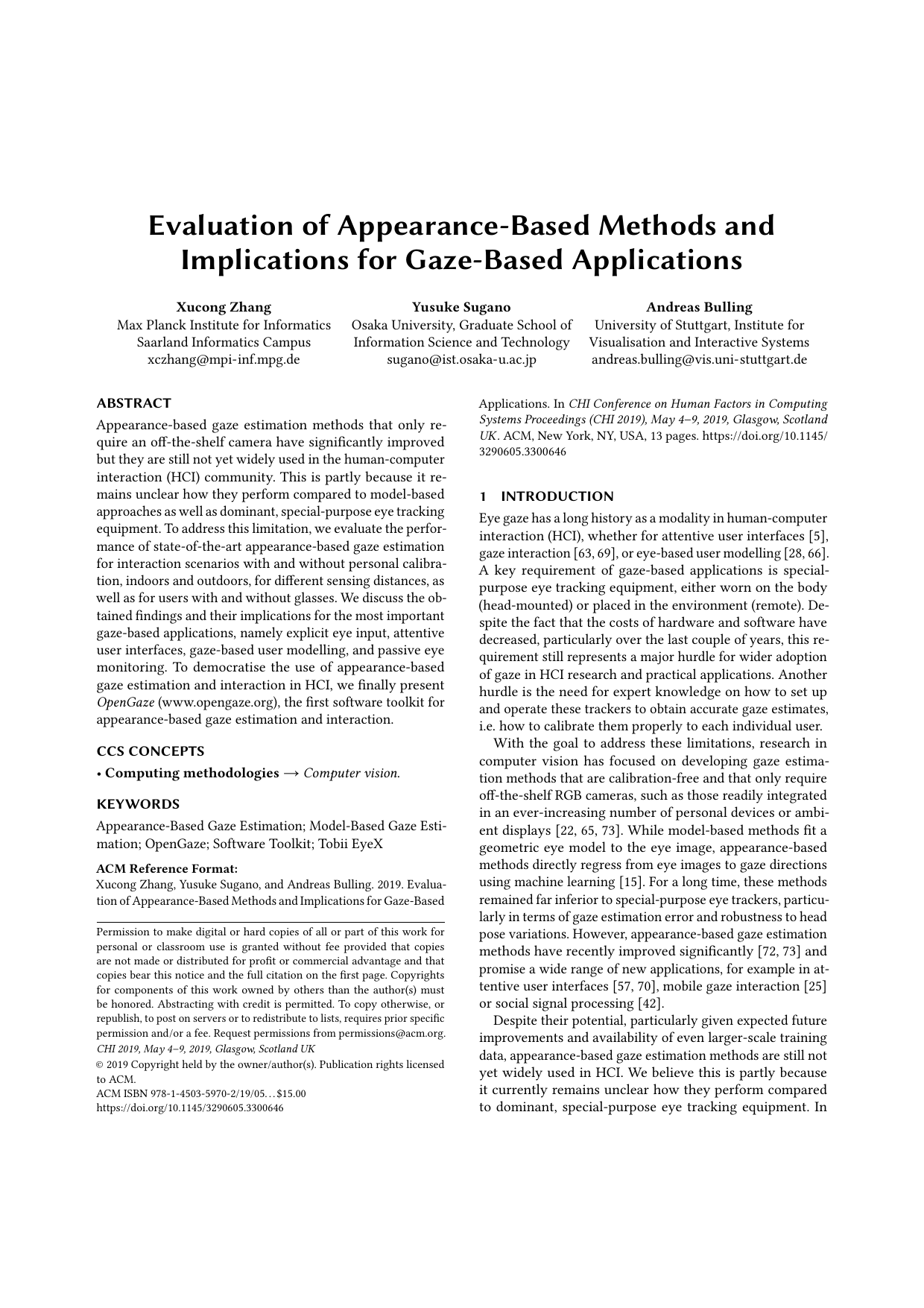

Evaluation of Appearance-Based Methods and Implications for Gaze-Based Applications

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–13, 2019.

-

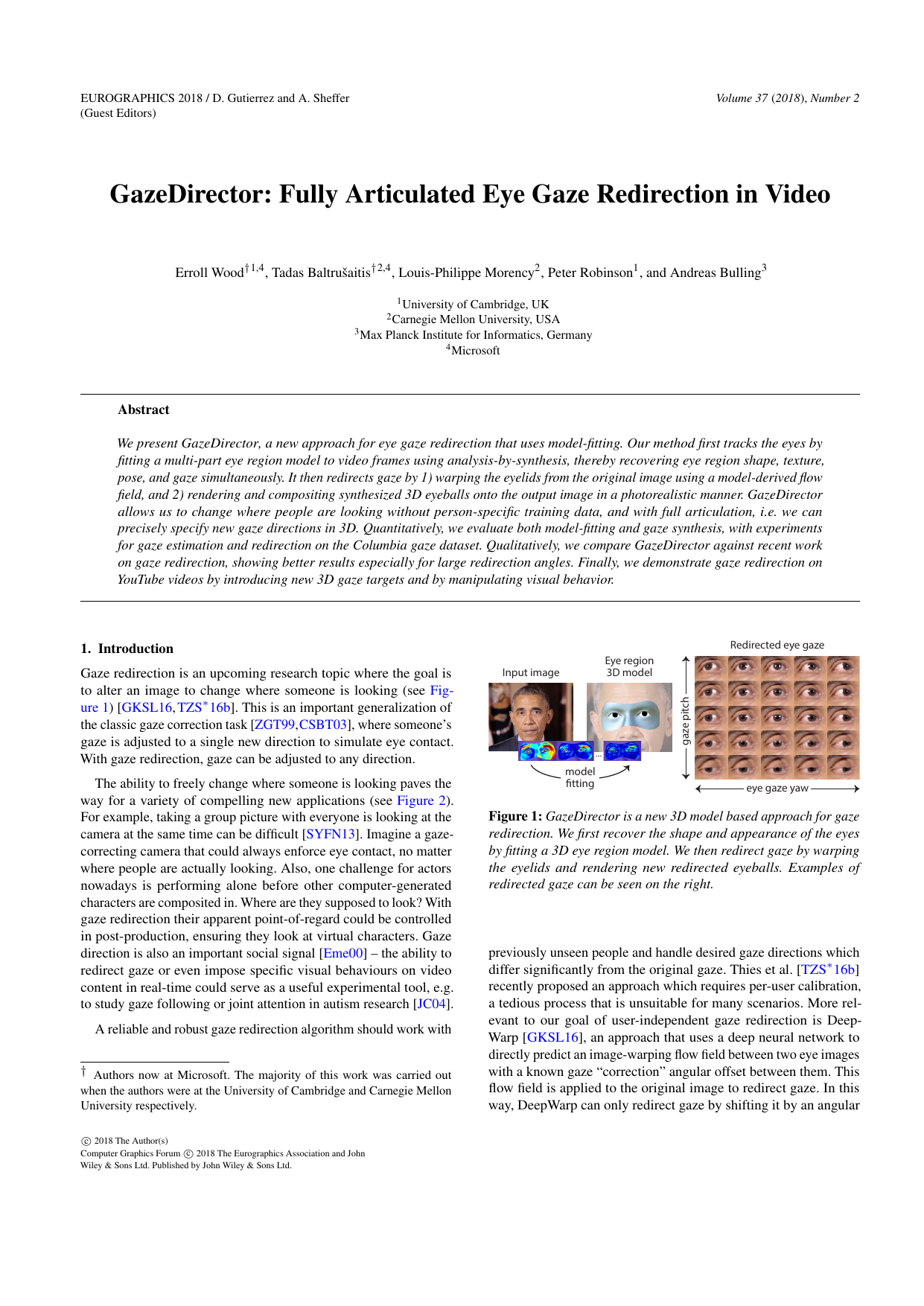

GazeDirector: Fully Articulated Eye Gaze Redirection in Video

Computer Graphics Forum (CGF), 37(2), pp. 217-225, 2018.

-

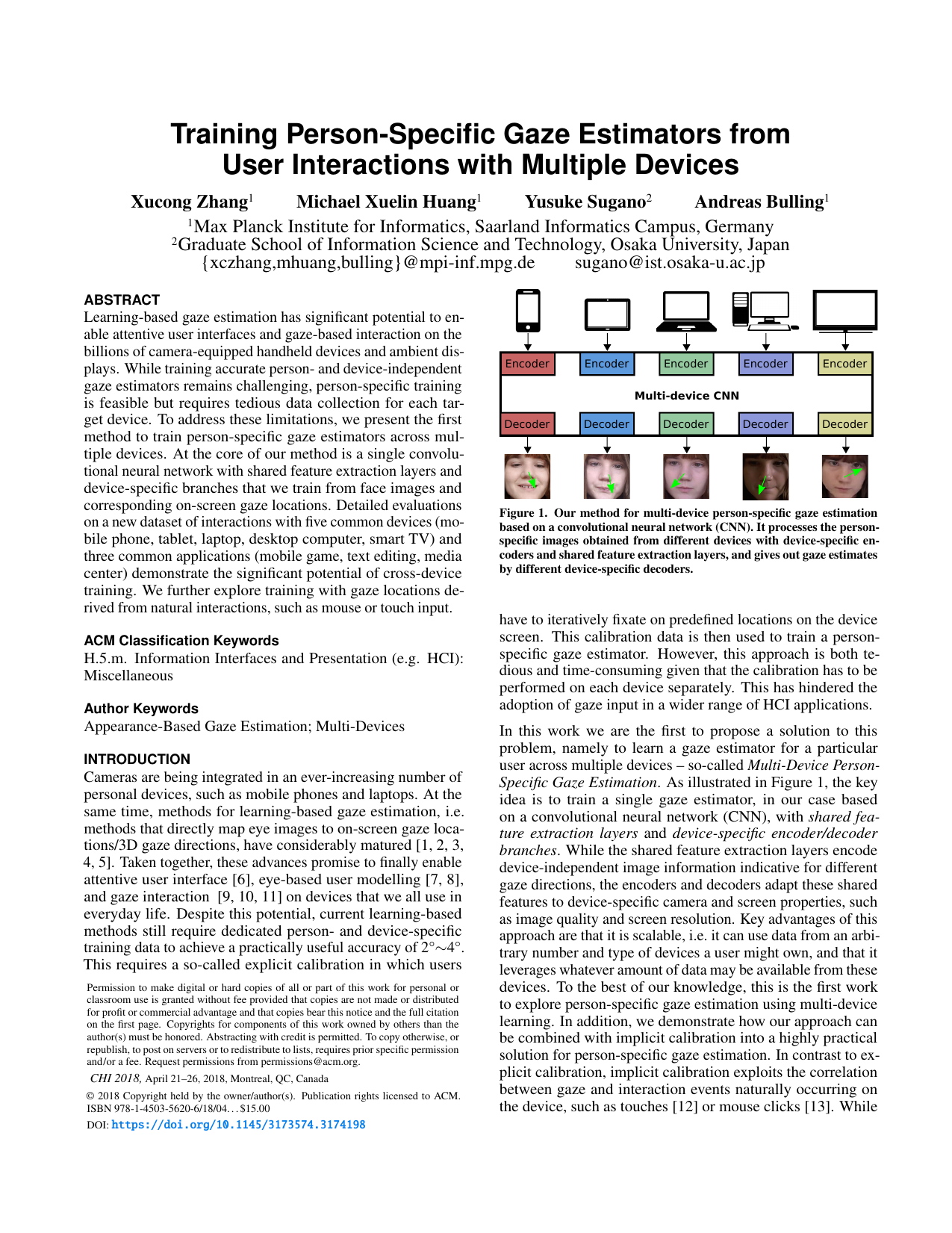

Training Person-Specific Gaze Estimators from Interactions with Multiple Devices

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–12, 2018.

-

Forecasting User Attention During Everyday Mobile Interactions Using Device-Integrated and Wearable Sensors

Proc. ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI), pp. 1–13, 2018.

-

Error-Aware Gaze-Based Interfaces for Robust Mobile Gaze Interaction

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2018.

-

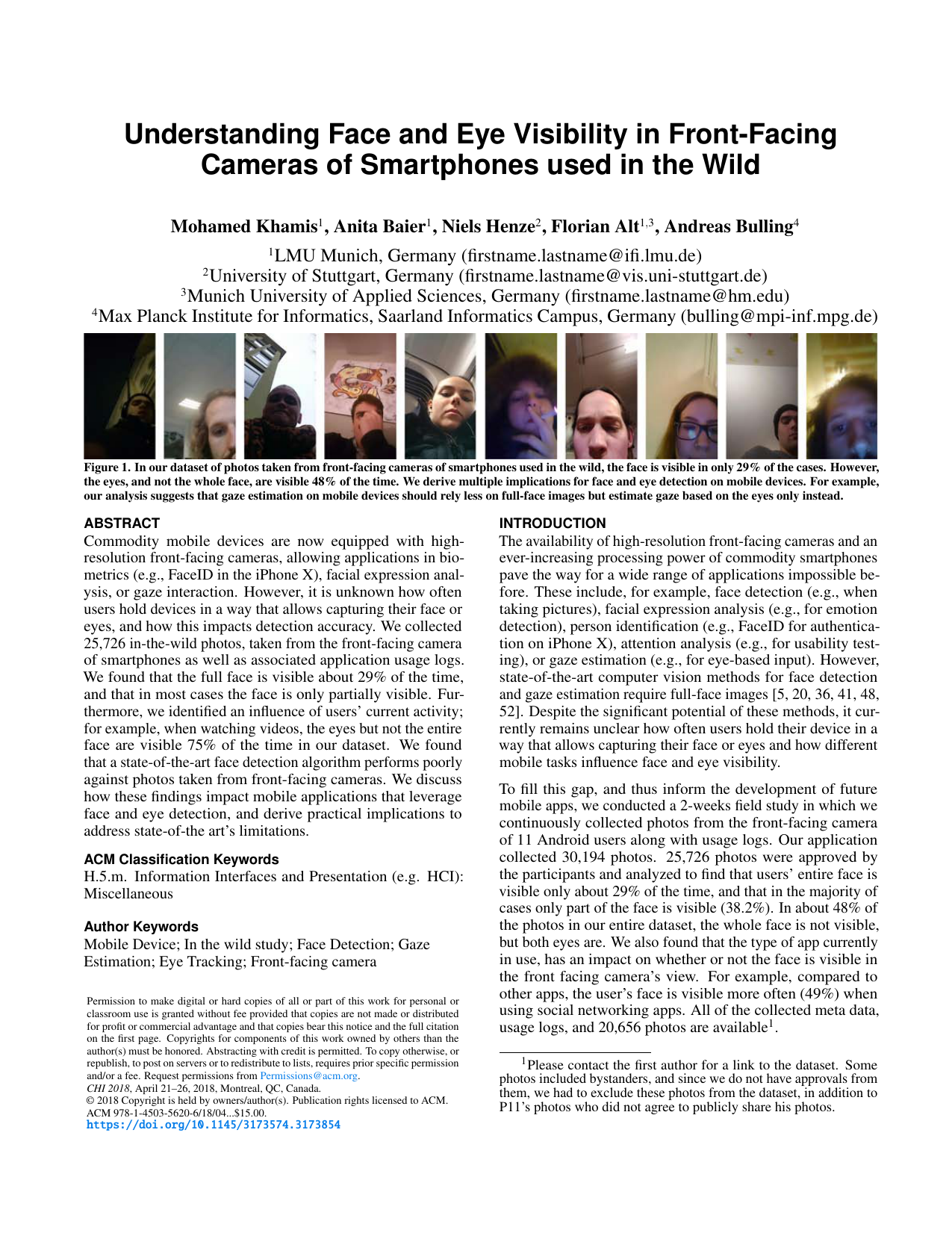

Understanding Face and Eye Visibility in Front-Facing Cameras of Smartphones used in the Wild

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–12, 2018.

-

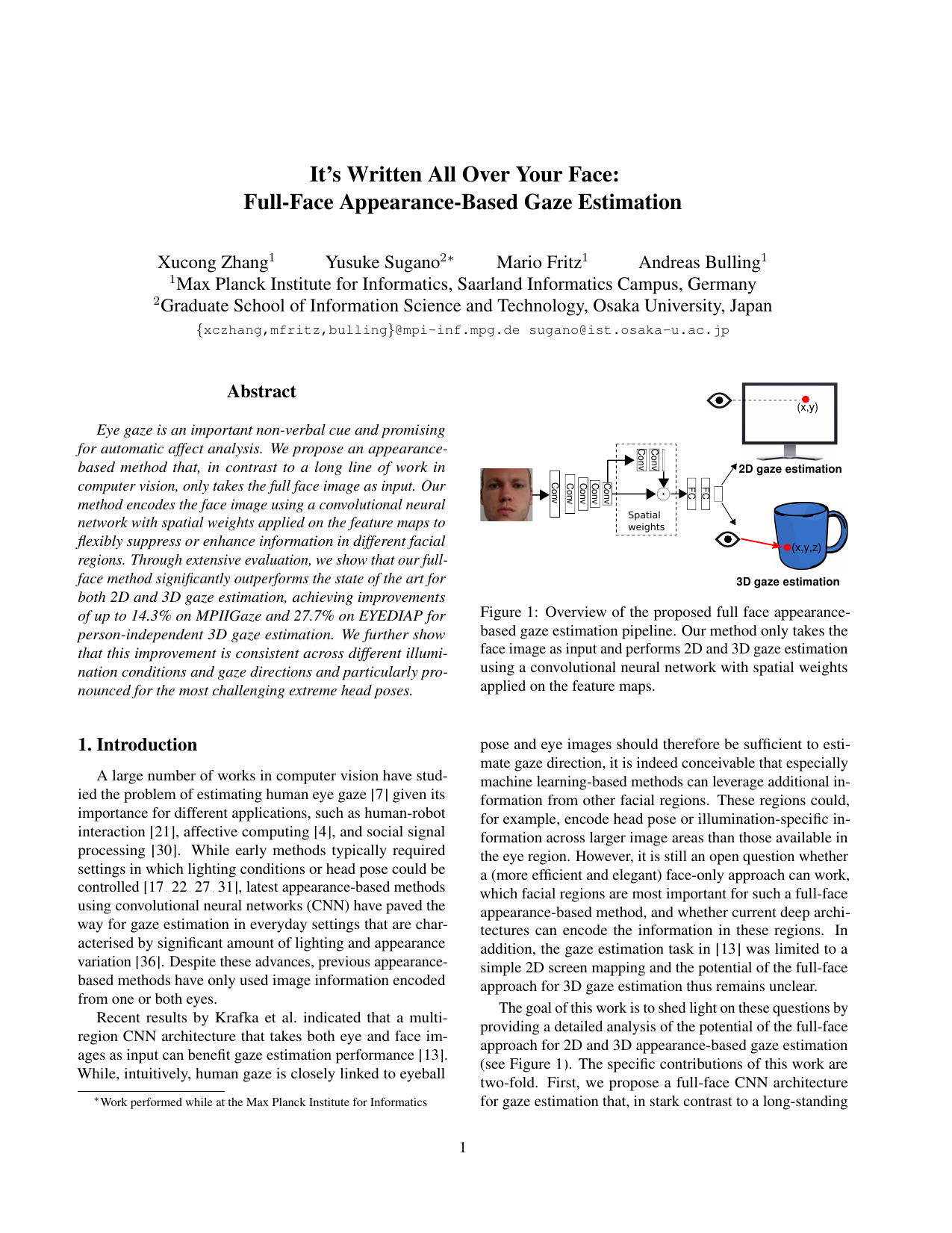

It’s Written All Over Your Face: Full-Face Appearance-Based Gaze Estimation

Proc. IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 2299-2308, 2017.

-

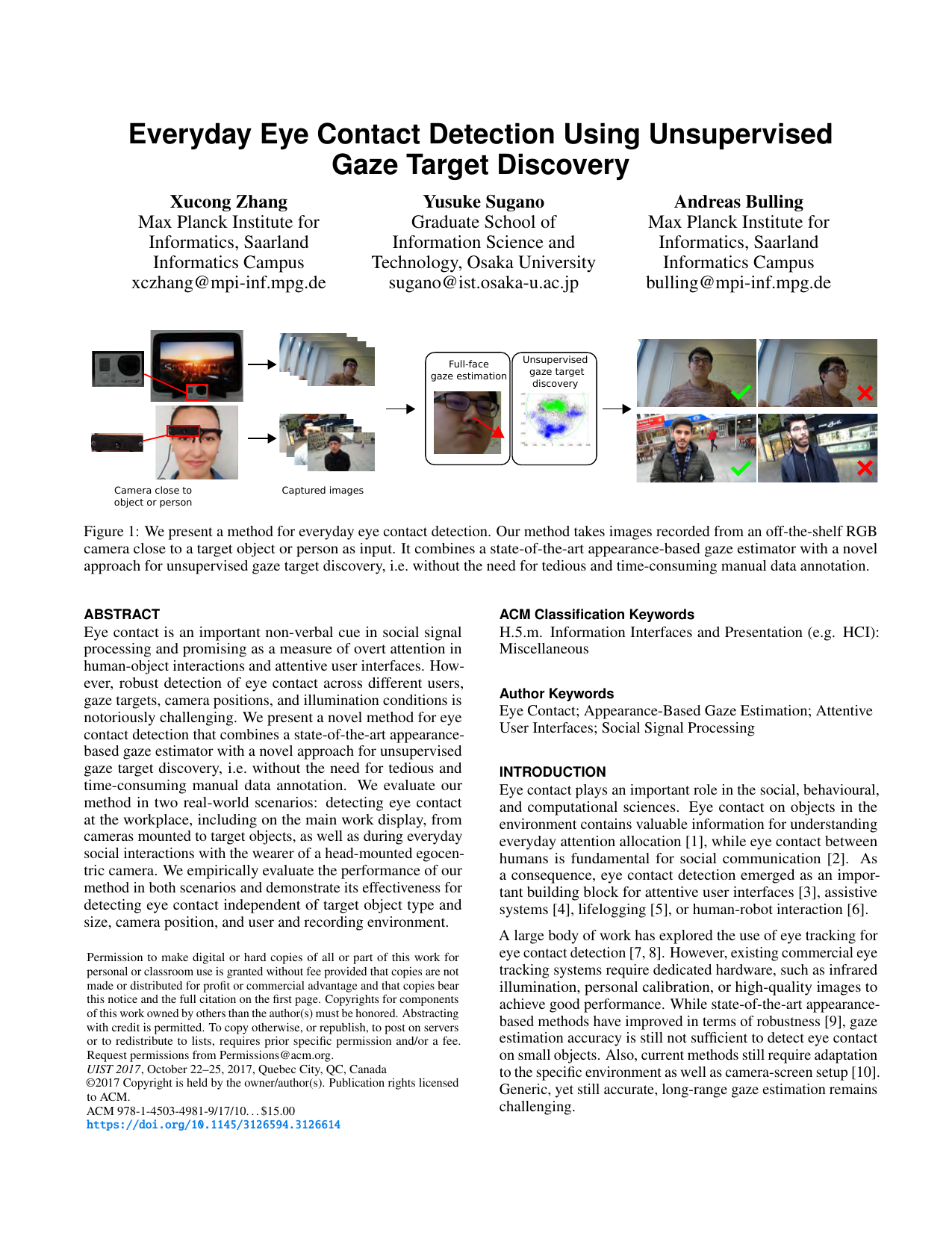

Everyday Eye Contact Detection Using Unsupervised Gaze Target Discovery

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 193-203, 2017.

-

Learning an appearance-based gaze estimator from one million synthesised images

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 131–138, 2016.

-

A 3D Morphable Eye Region Model for Gaze Estimation

Proc. European Conference on Computer Vision (ECCV), pp. 297-313, 2016.

-

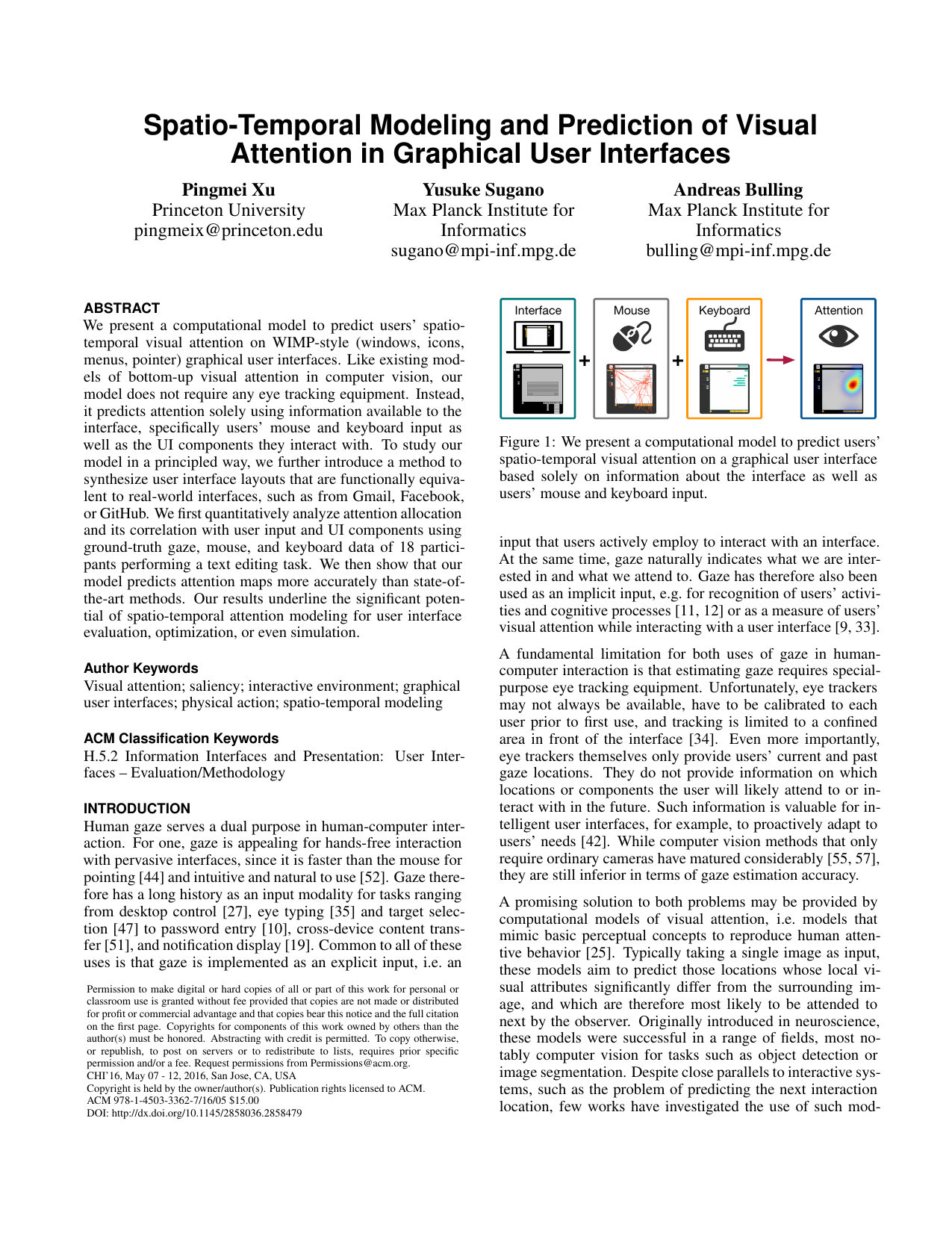

Spatio-Temporal Modeling and Prediction of Visual Attention in Graphical User Interfaces

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 3299-3310, 2016.

-

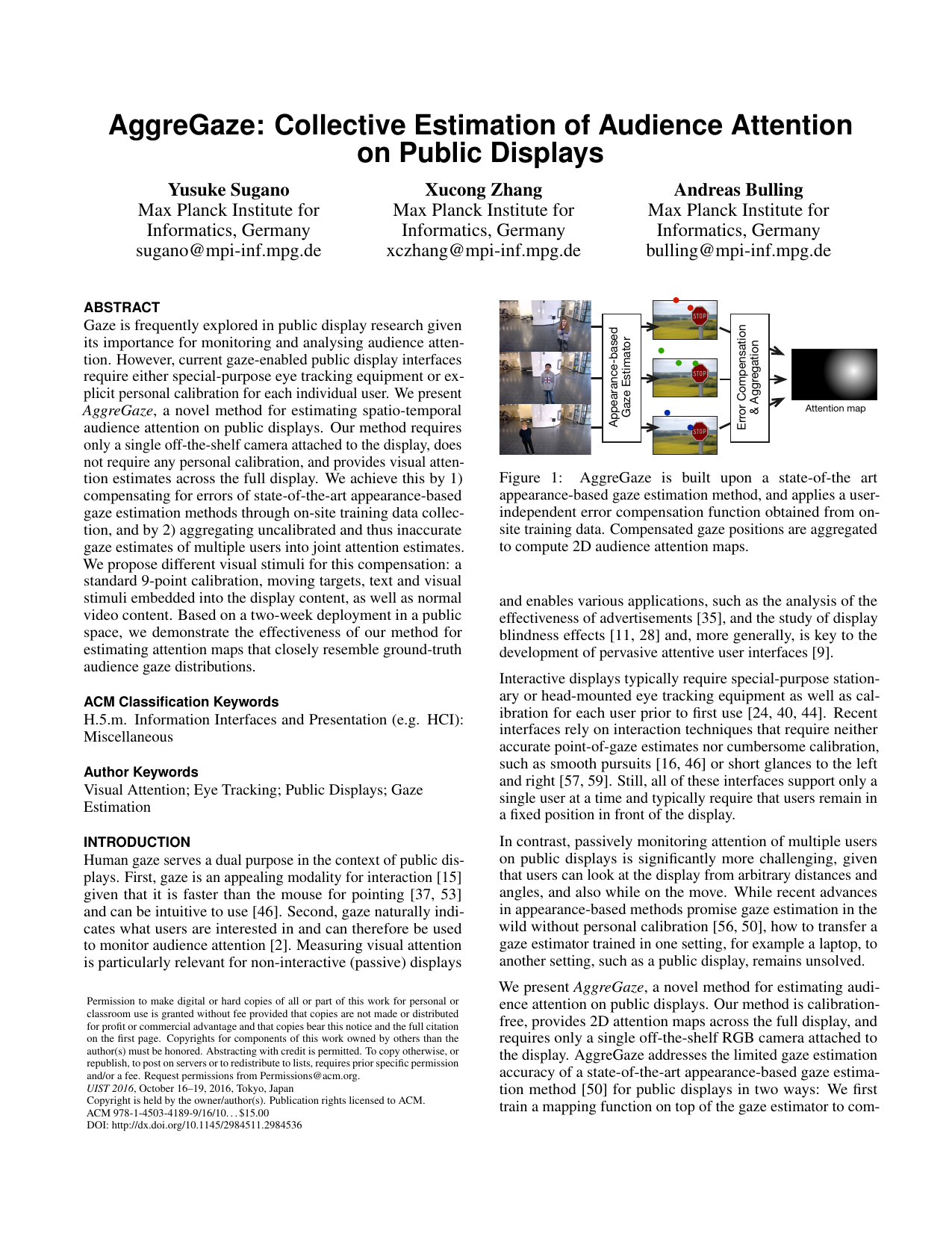

AggreGaze: Collective Estimation of Audience Attention on Public Displays

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 821-831, 2016.

-

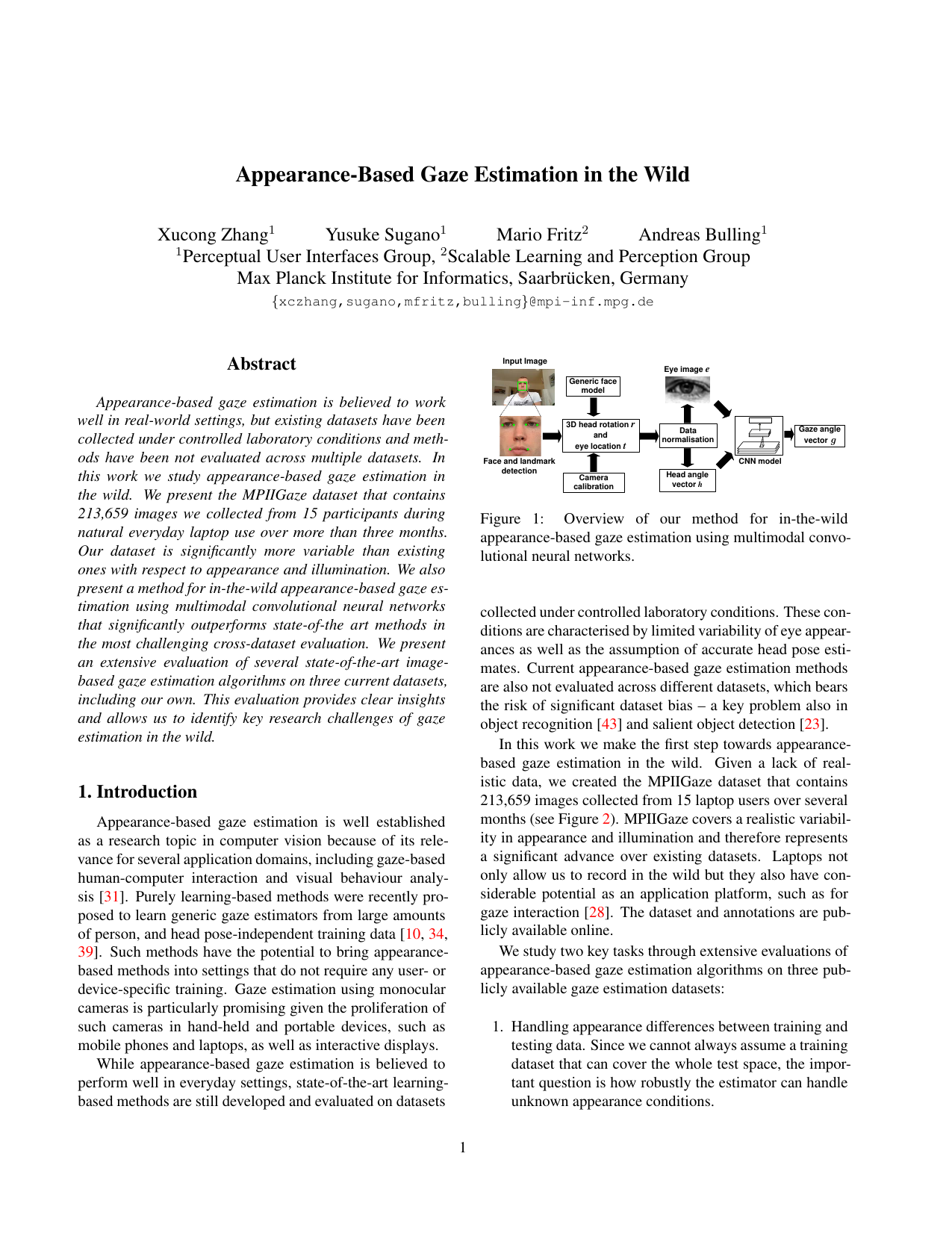

Appearance-based Gaze Estimation in the Wild

Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4511-4520, 2015.

-

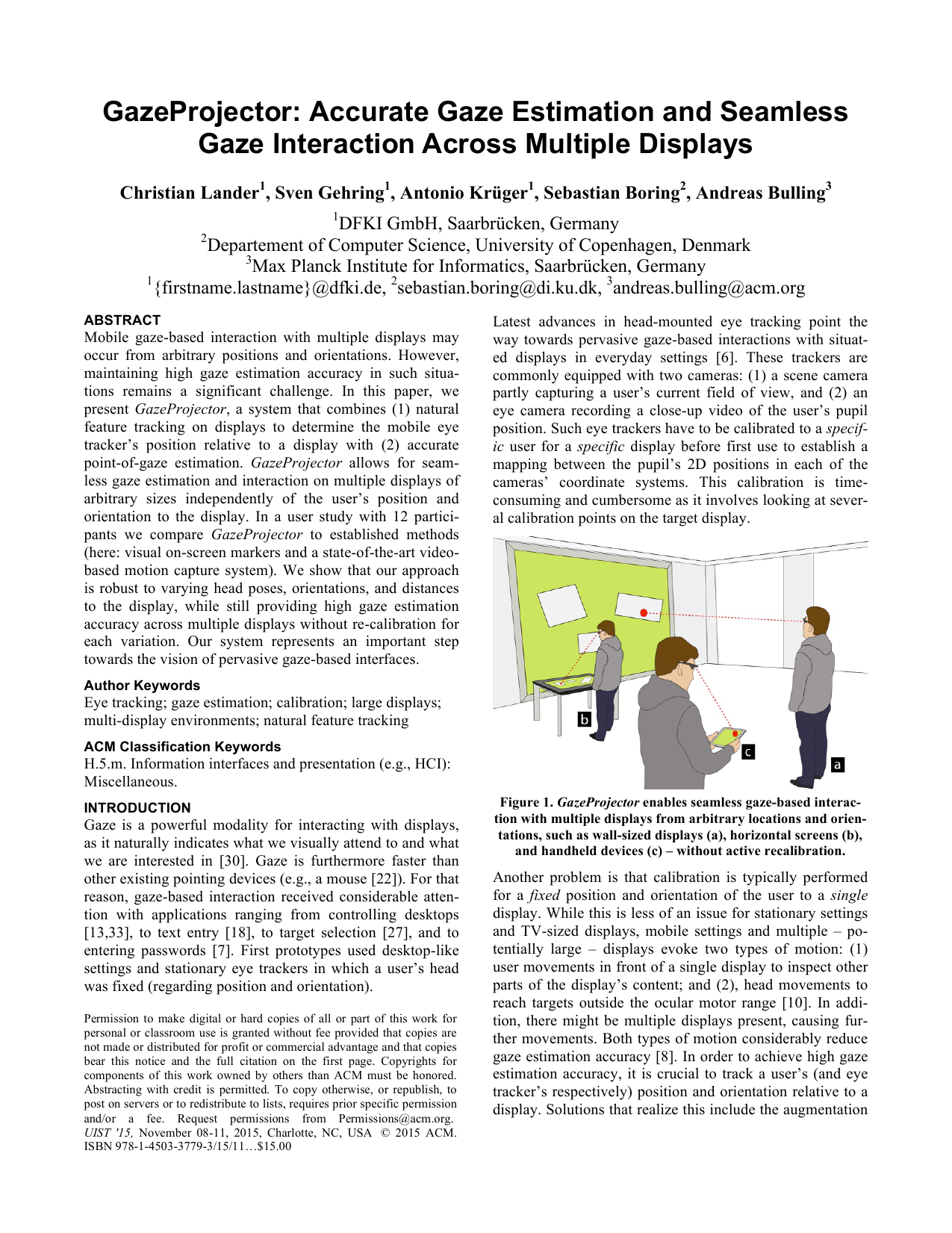

GazeProjector: Accurate Gaze Estimation and Seamless Gaze Interaction Across Multiple Displays

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 395-404, 2015.

-

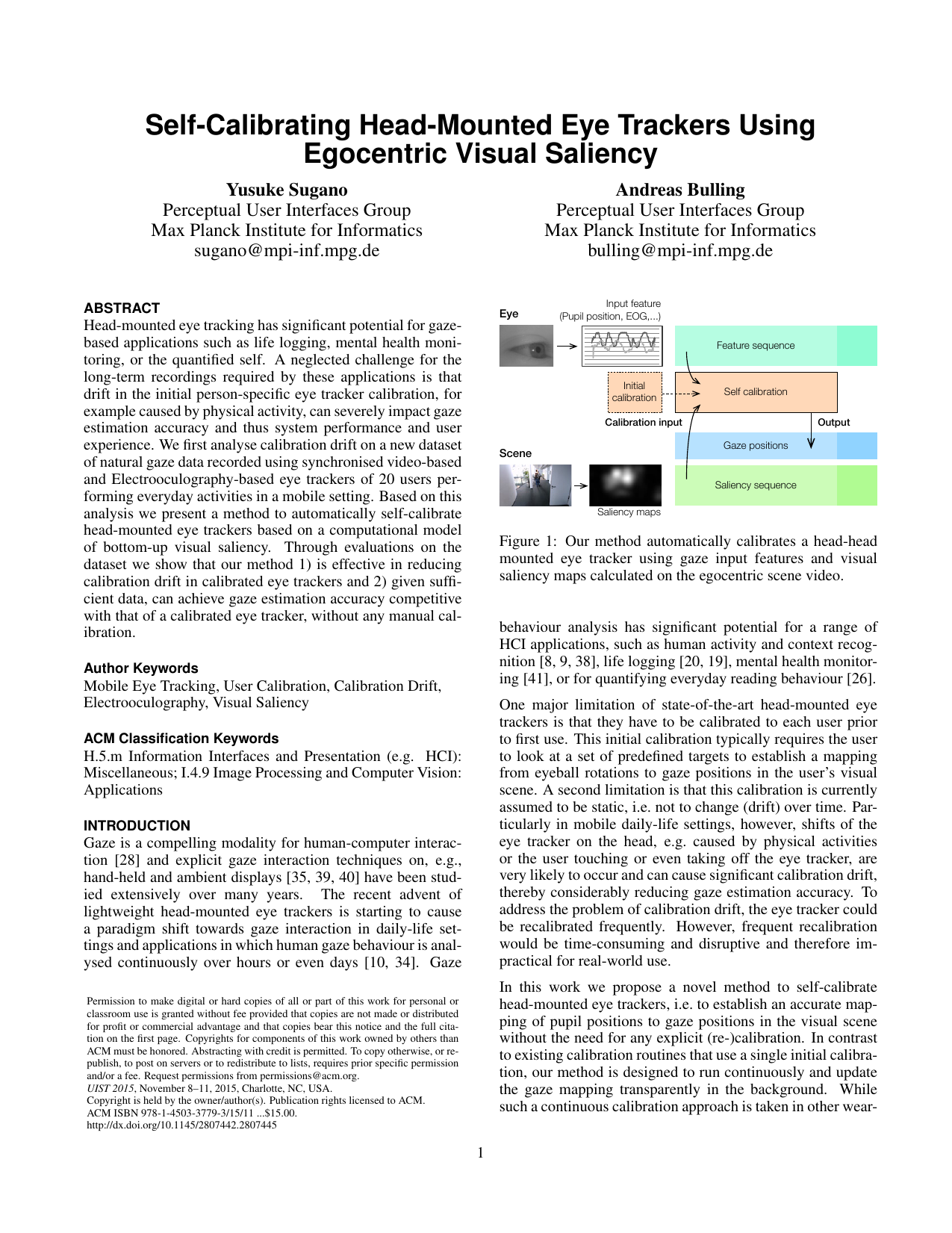

Self-Calibrating Head-Mounted Eye Trackers Using Egocentric Visual Saliency

Proc. ACM Symposium on User Interface Software and Technology (UIST), pp. 363-372, 2015.

-

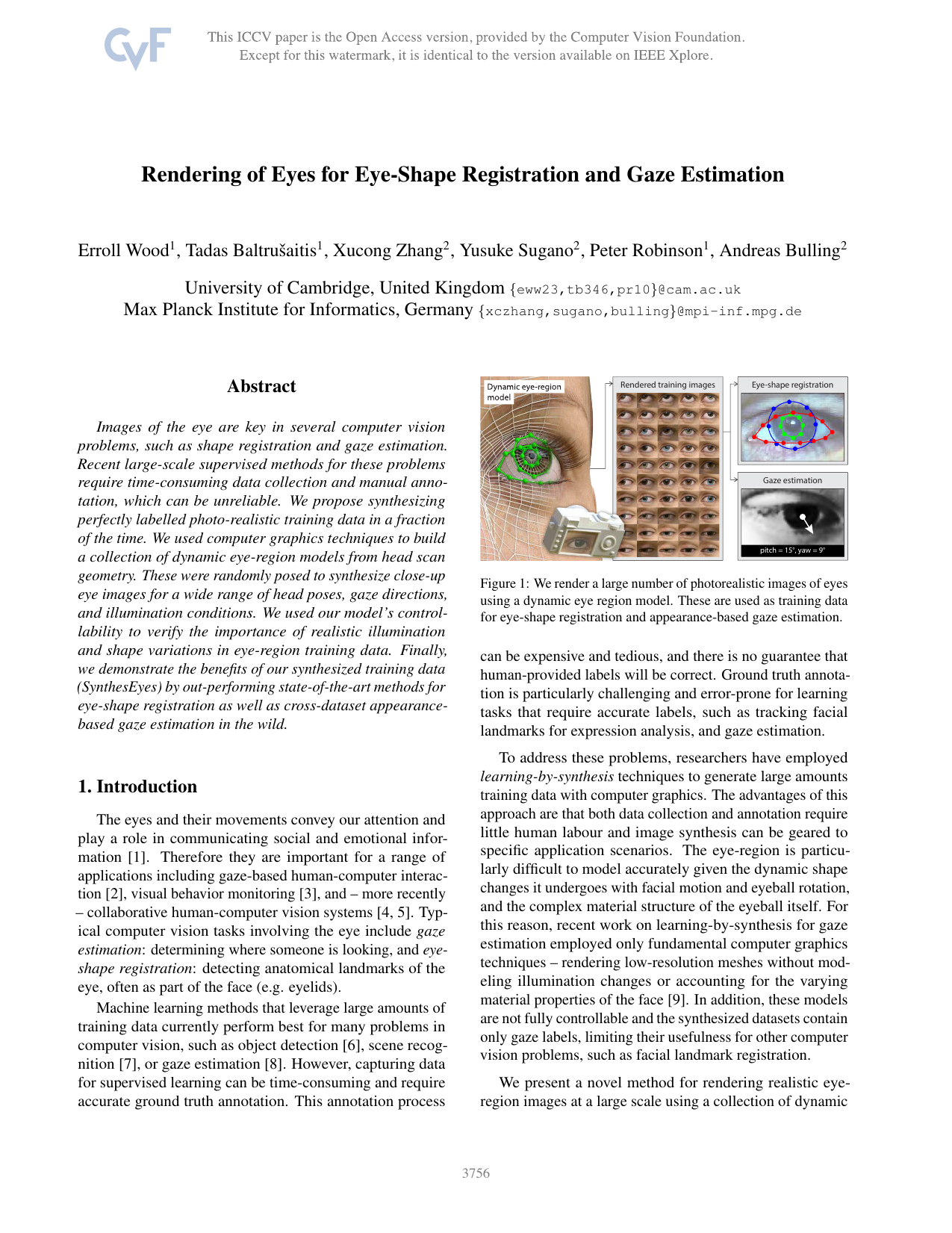

Rendering of Eyes for Eye-Shape Registration and Gaze Estimation

Proc. IEEE International Conference on Computer Vision (ICCV), pp. 3756-3764, 2015.

-

Eye Tracking and Eye-Based Human-Computer Interaction

Stephen H. Fairclough, Kiel Gilleade (Eds.): Advances in Physiological Computing, Springer Publishing London, pp. 39-65, 2014.

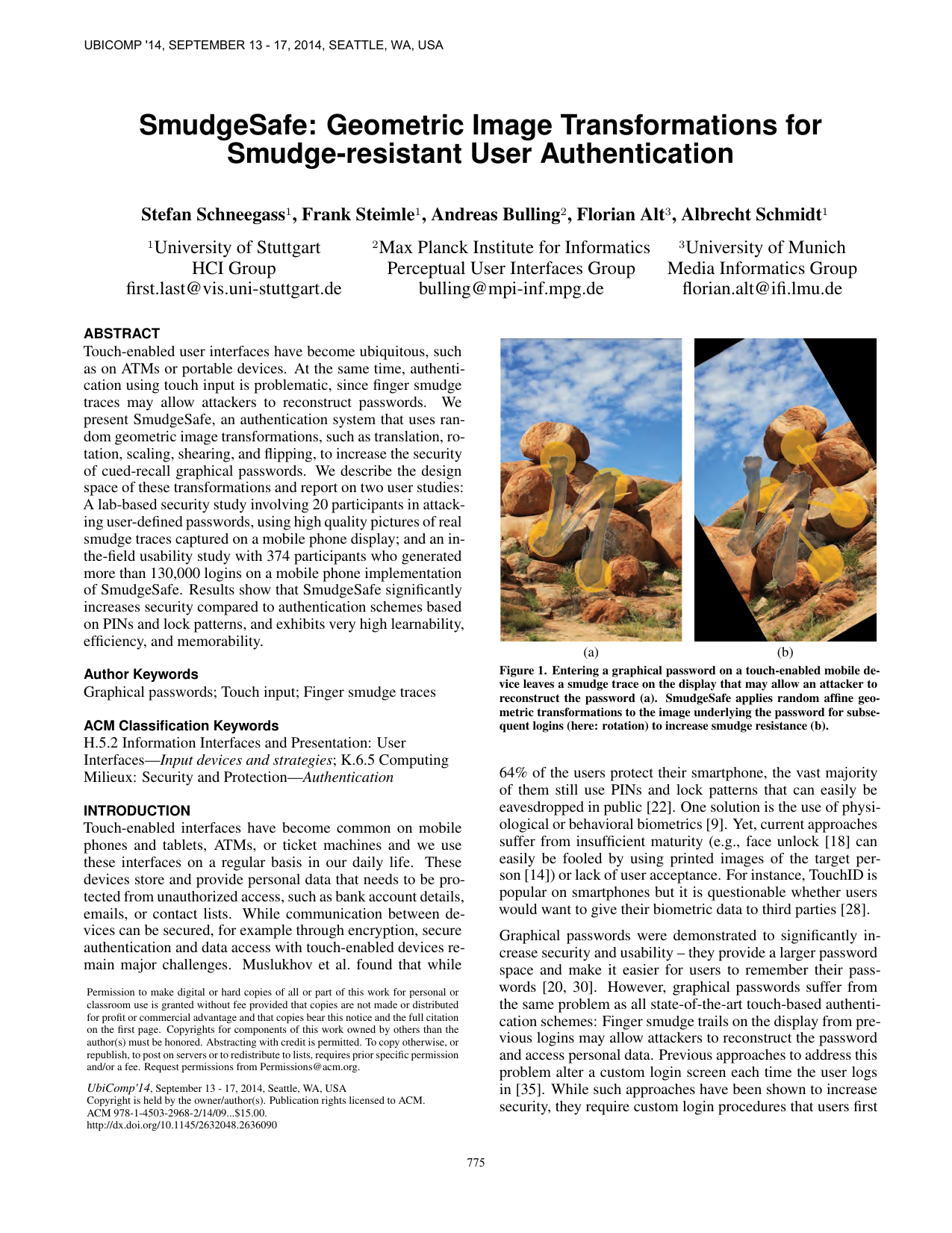

Usable Security and Privacy

-

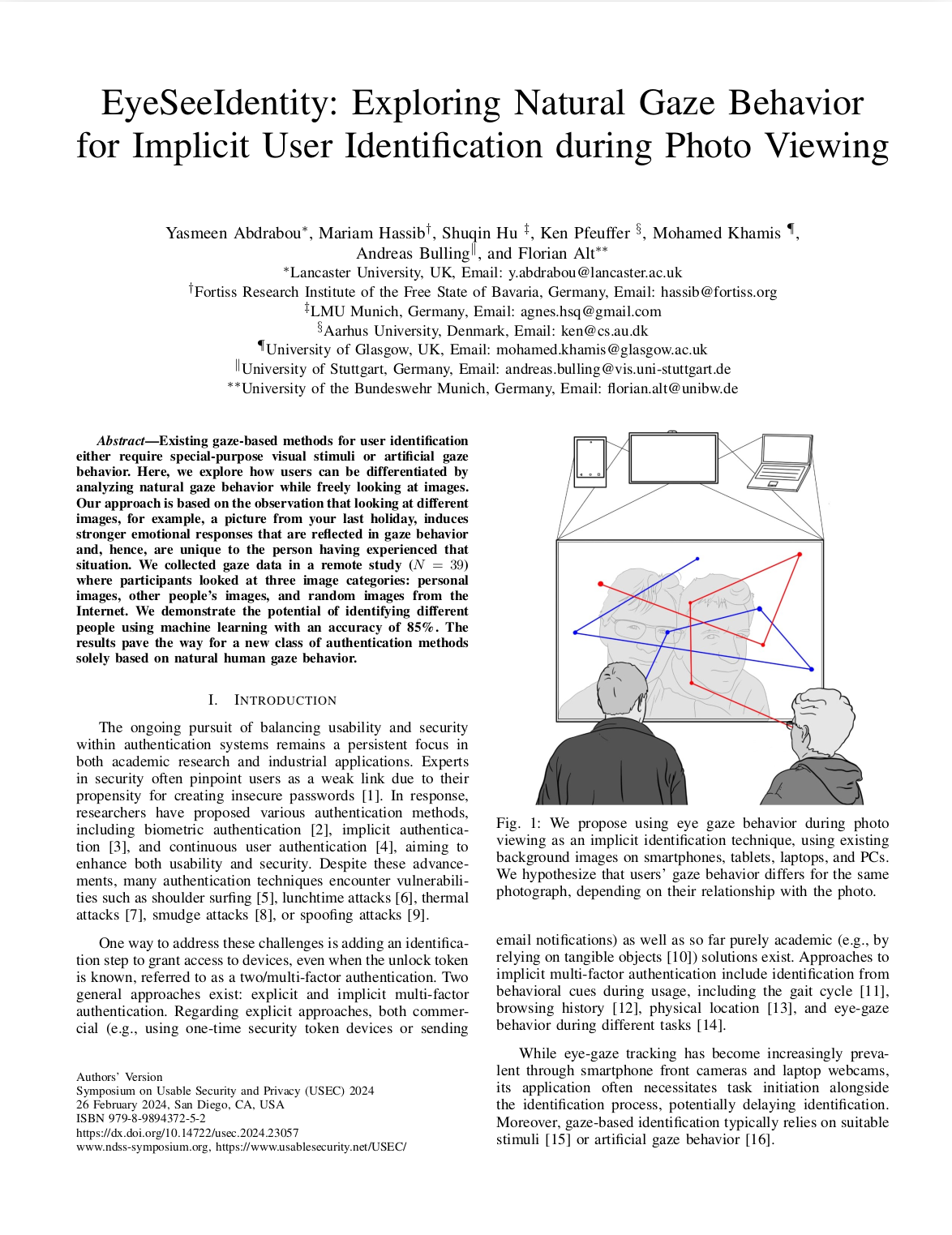

EyeSeeIdentity: Exploring Natural Gaze Behaviour for Implicit User Identification during Photo Viewing

Proc. Symposium on Usable Security and Privacy (USEC), pp. 1–12, 2024.

-

Privacy-Aware Eye Tracking: Challenges and Future Directions

IEEE Pervasive Computing, 22(1), pp. 95-102, 2023.

-

Federated Learning for Appearance-based Gaze Estimation in the Wild

Proceedings of The 1st Gaze Meets ML workshop, PMLR, pp. 20–36, 2023.

-

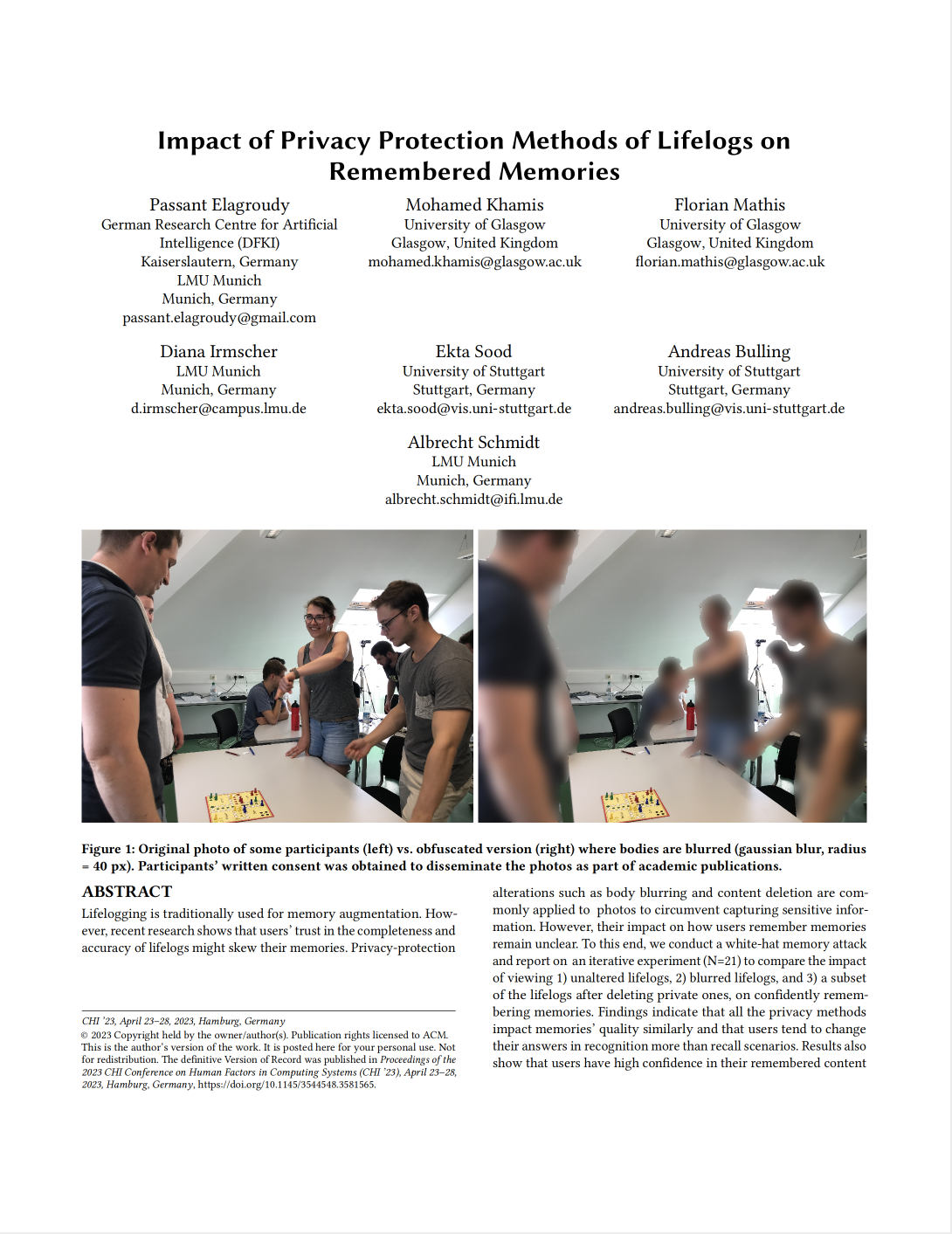

Impact of Privacy Protection Methods of Lifelogs on Remembered Memories

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–10, 2023.

-

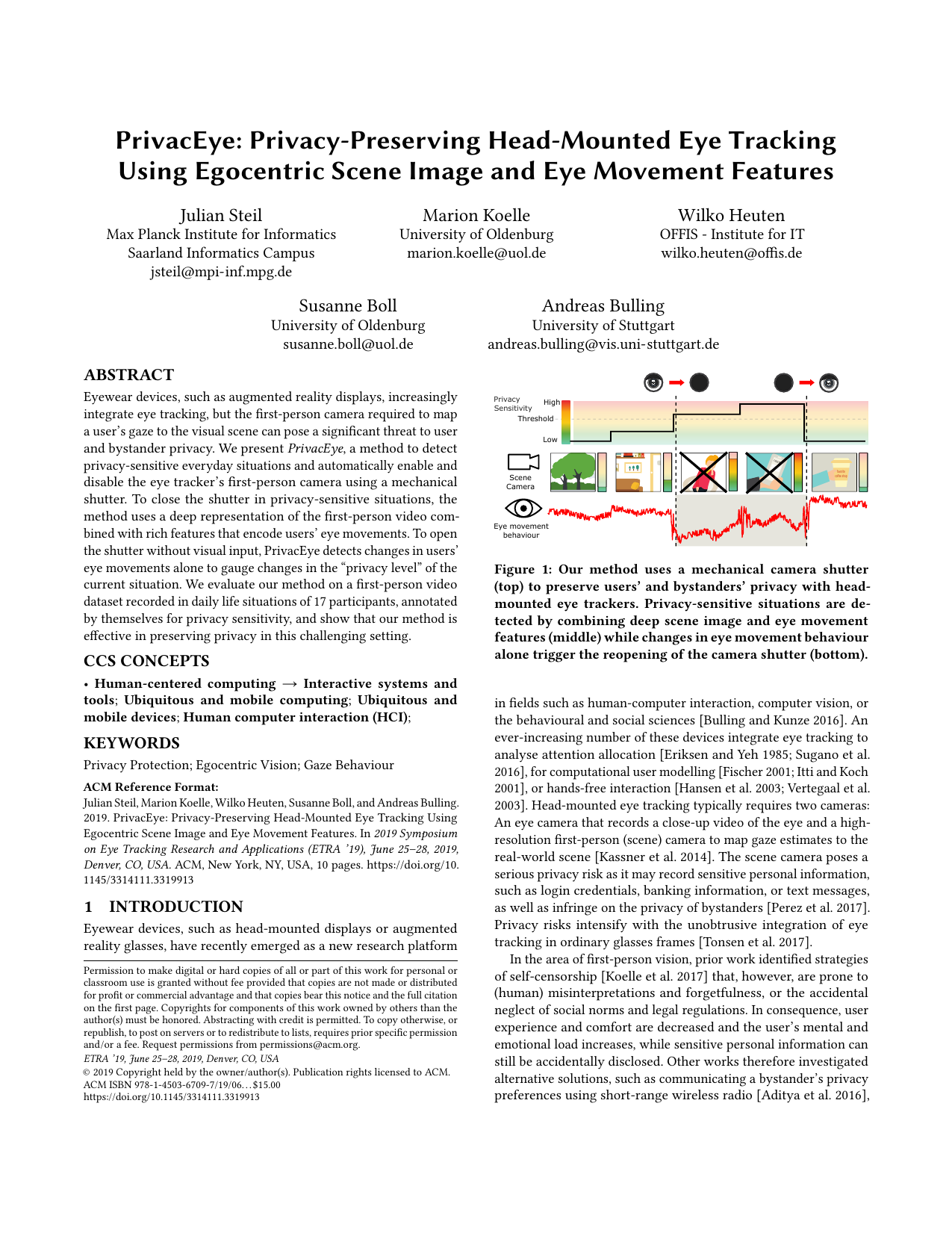

PrivacEye: Privacy-Preserving Head-Mounted Eye Tracking Using Egocentric Scene Image and Eye Movement Features

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2019.

-

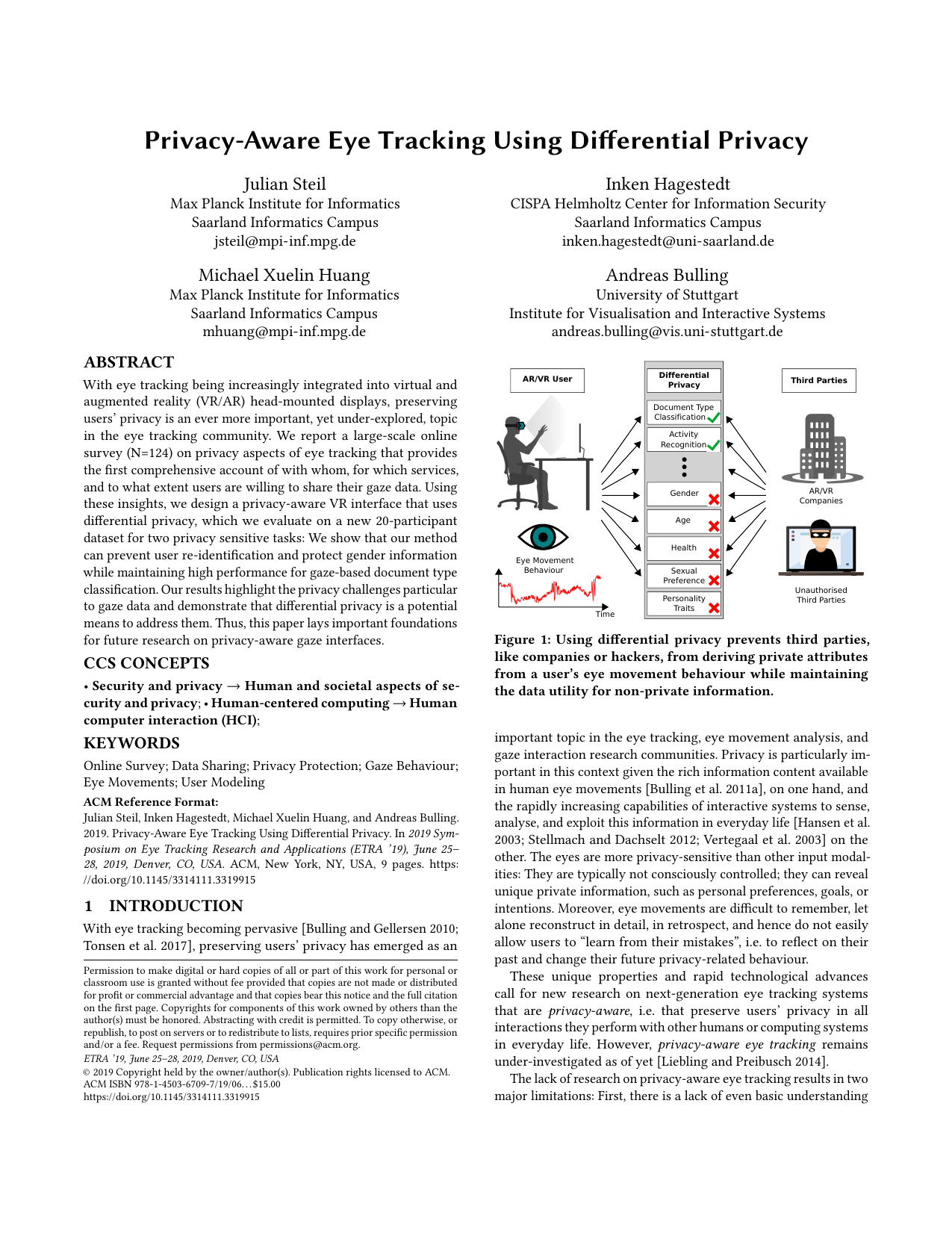

Privacy-Aware Eye Tracking Using Differential Privacy

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–9, 2019.

-

CueAuth: Comparing Touch, Mid-Air Gestures, and Gaze for Cue-based Authentication on Situated Displays

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), 2(7), pp. 1–22, 2018.

-

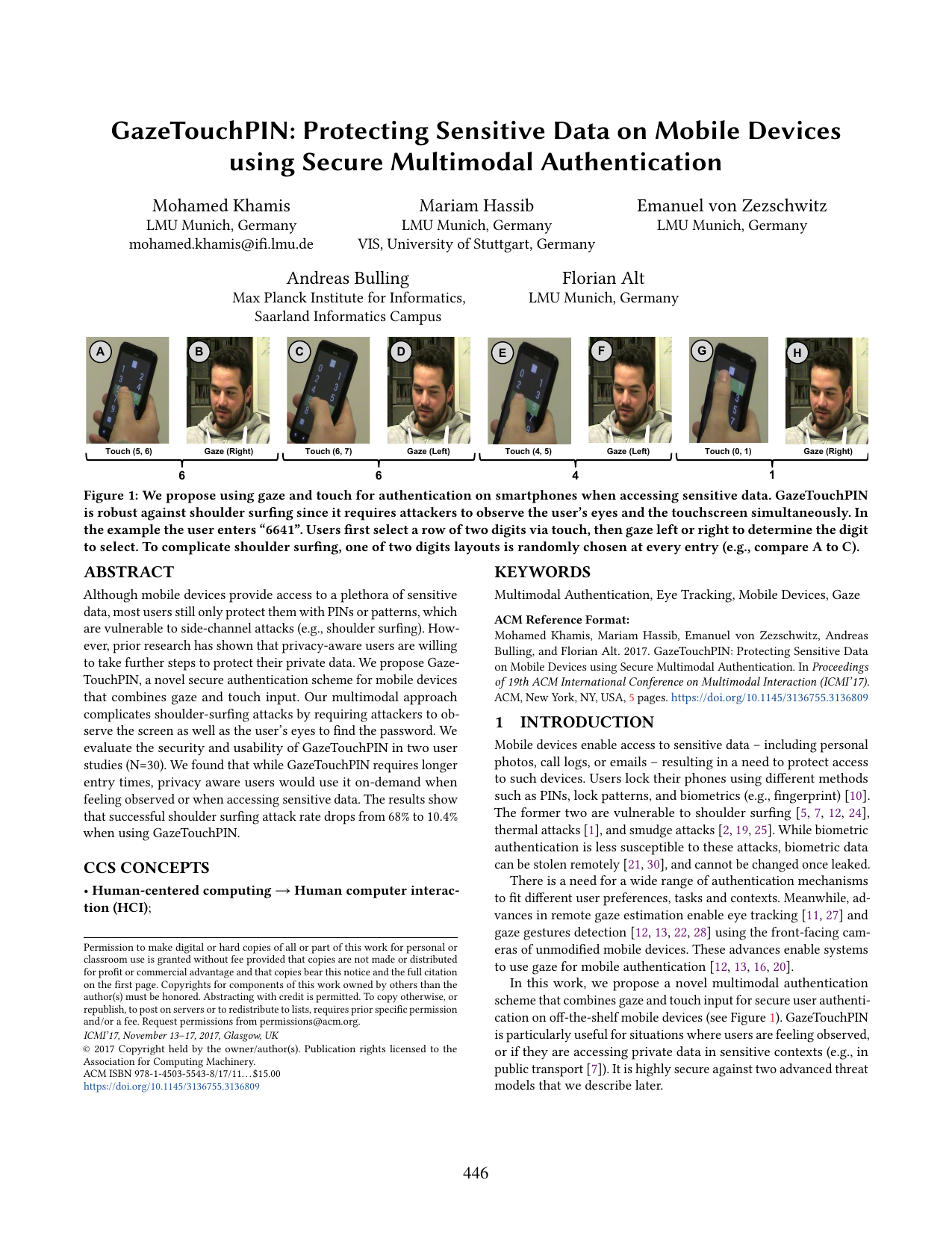

GazeTouchPIN: Protecting Sensitive Data on Mobile Devices using Secure Multimodal Authentication

Proc. ACM International Conference on Multimodal Interaction (ICMI), pp. 446-450, 2017.

-

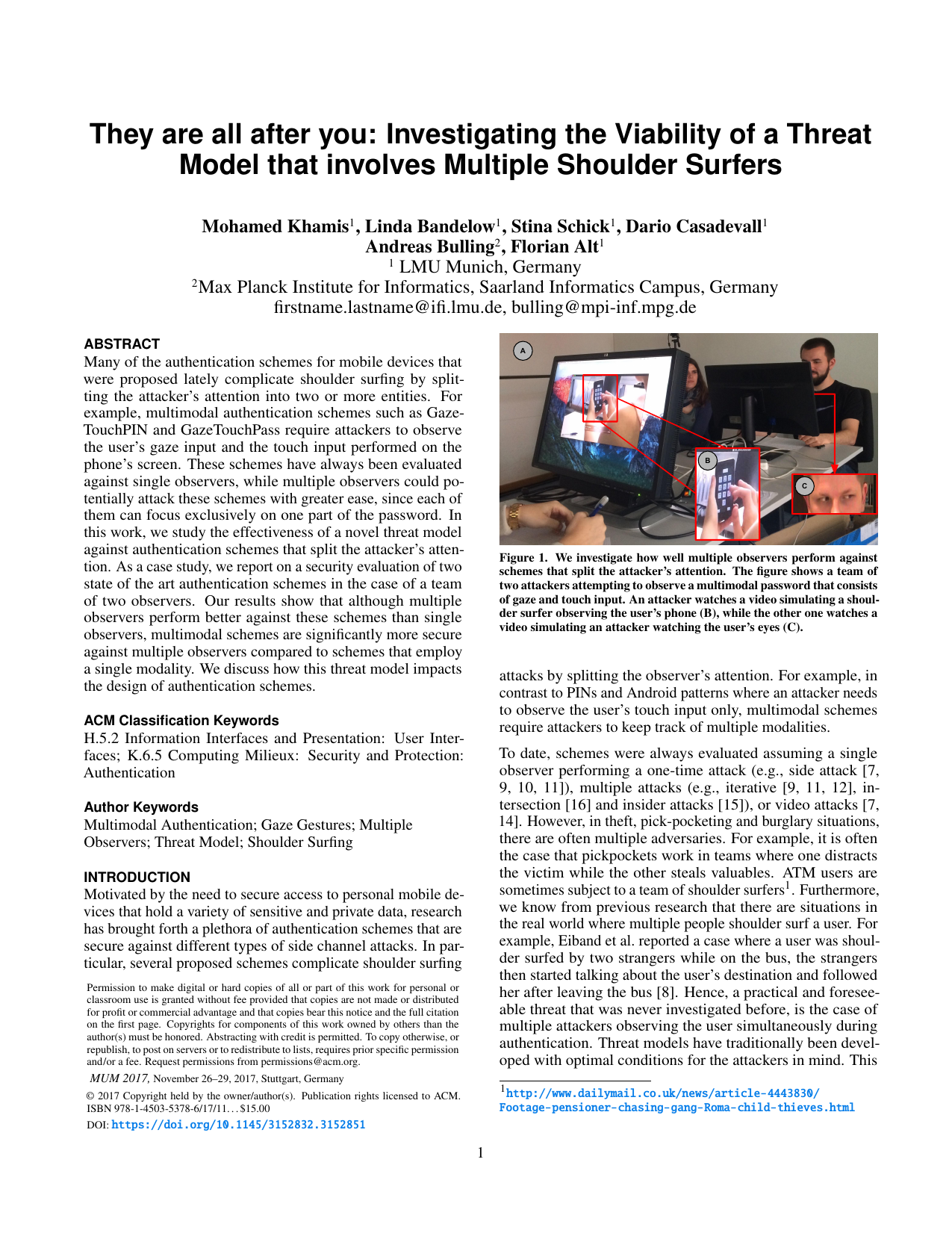

They are all after you: Investigating the Viability of a Threat Model that involves Multiple Shoulder Surfers

Proc. International Conference on Mobile and Ubiquitous Multimedia (MUM), pp. 1–5, 2017.

-

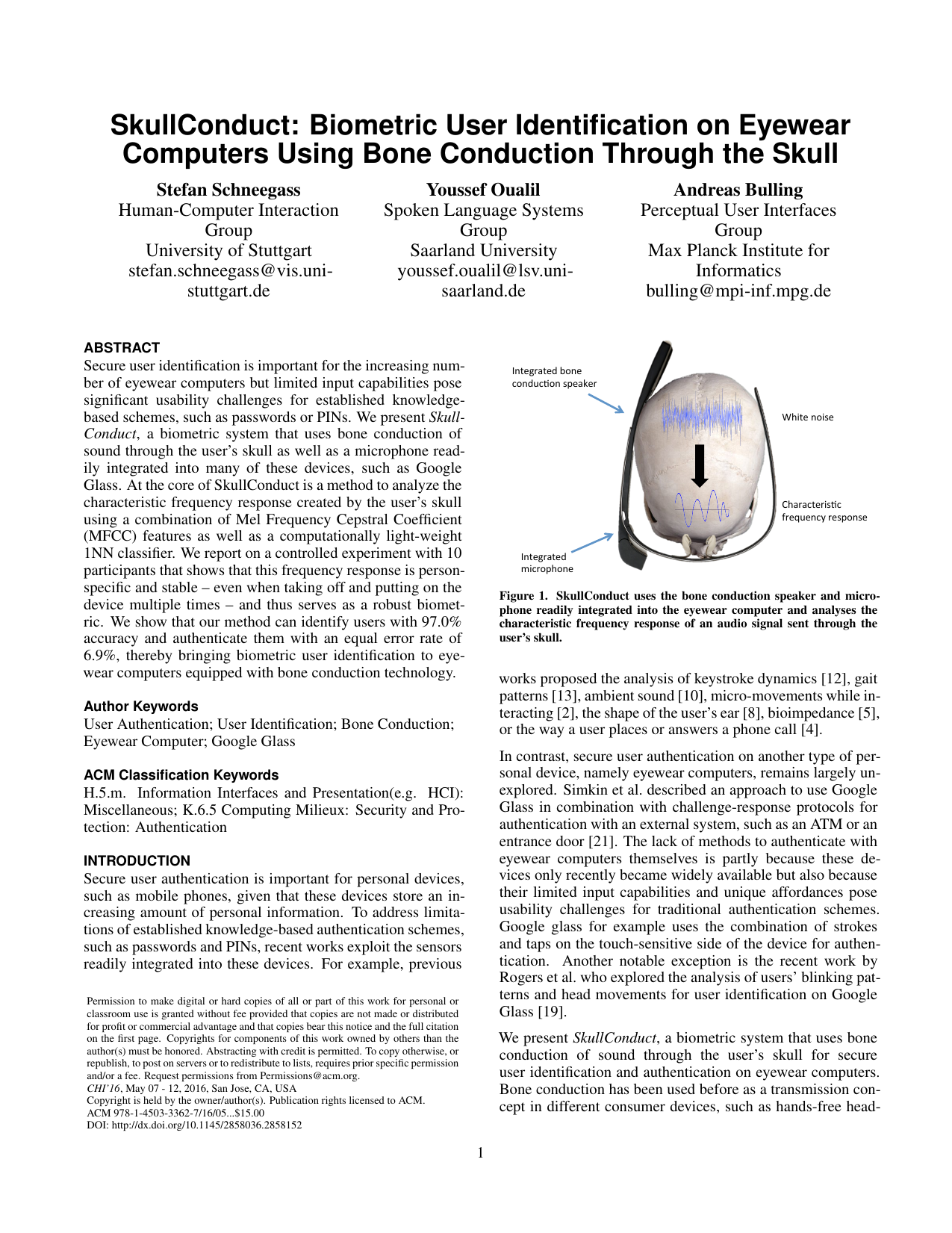

SkullConduct: Biometric User Identification on Eyewear Computers Using Bone Conduction Through the Skull

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1379-1384, 2016.

-

GazeTouchPass: Multimodal Authentication Using Gaze and Touch on Mobile Devices

Ext. Abstr. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 2156-2164, 2016.

-

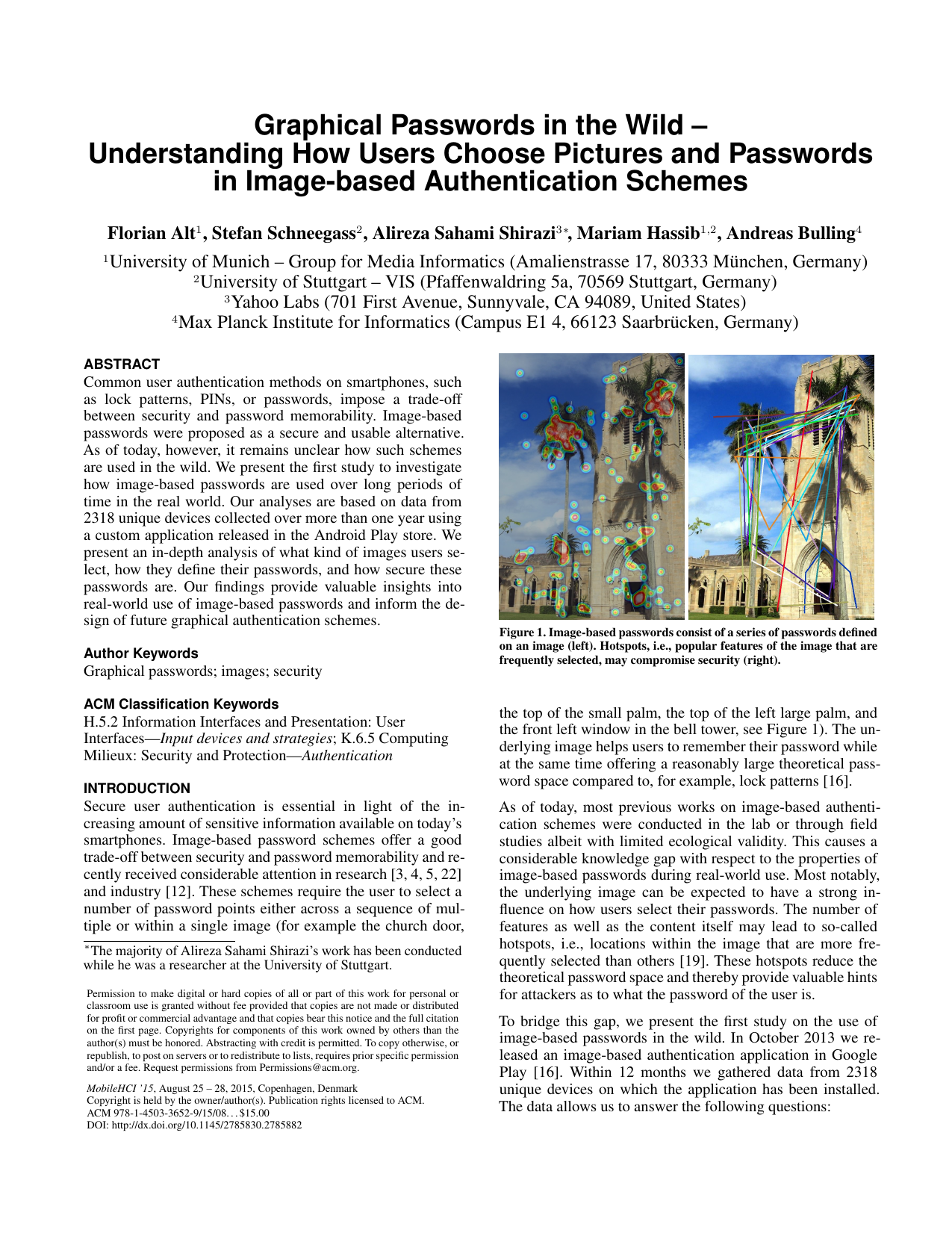

Graphical Passwords in the Wild – Understanding How Users Choose Pictures and Passwords in Image-based Authentication Schemes

Proc. ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI), pp. 316-322, 2015.