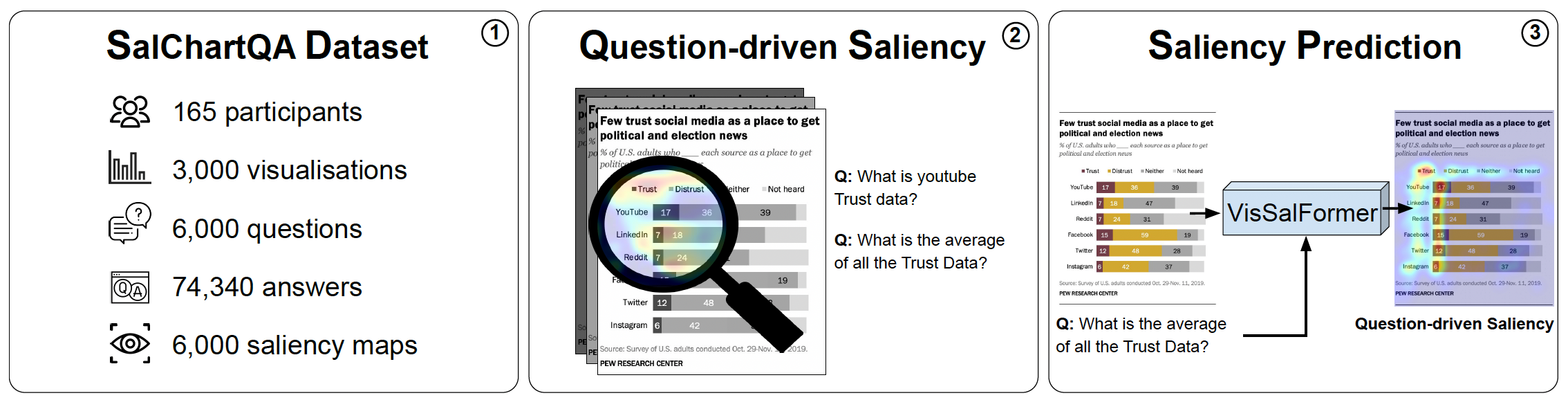

SalChartQA: Question-driven Saliency on Information Visualisations

Yao Wang, Weitian Wang, Abdullah Abdelhafez, Mayar Elfares, Zhiming Hu, Mihai Bâce, Andreas Bulling

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–14, 2024.

Abstract

Understanding the link between visual attention and users' needs when visually exploring information visualisations is under-explored due to a lack of large and diverse datasets to facilitate these analyses. To fill this gap, we introduce SalChartQA – a novel crowd-sourced dataset that uses the BubbleView interface as a proxy for human gaze and a question-answering (QA) paradigm to induce different information needs in users. SalChartQA contains 74,340 answers to 6,000 questions on 3,000 visualisations. Informed by our analyses demonstrating the tight correlation between the question and visual saliency, we propose the first computational method to predict question-driven saliency on information visualisations. Our method outperforms state-of-the-art saliency models, improving several metrics, such as the correlation coefficient and the Kullback-Leibler divergence. These results show the importance of information needs for shaping attention behaviour and pave the way for new applications, such as task-driven optimisation of visualisations or explainable AI in chart question-answering.

Links

Paper: wang24_chi.pdf

Supplementary Material: wang24_chi_sup.pdf

Dataset: https://darus.uni-stuttgart.de/dataset.xhtml?persistentId=doi:10.18419/darus-3884