GazeMoDiff: Gaze-guided Diffusion Model for Stochastic Human Motion Prediction

Haodong Yan, Zhiming Hu, Syn Schmitt, Andreas Bulling

arXiv:2312.12090, pp. 1–10, 2023.

Abstract

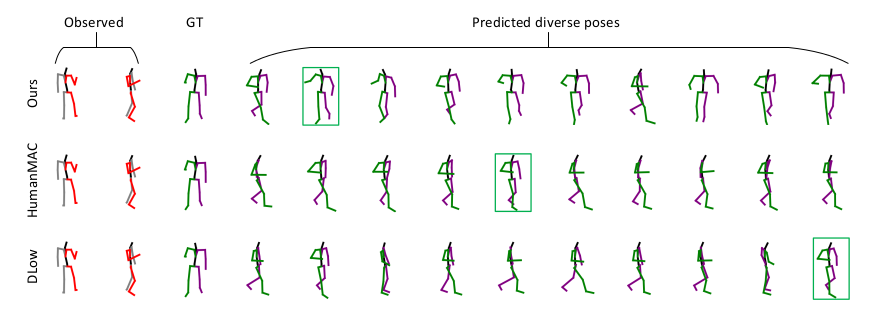

Human motion prediction is important for virtual reality (VR) applications, e.g., for realistic avatar animation. Existing methods have synthesised body motion only from observed past motion, despite the fact that human gaze is known to correlate strongly with body movements and is readily available in recent VR headsets. We present GazeMoDiff – a novel gaze-guided denoising diffusion model to generate stochastic human motions. Our method first uses a graph attention network to learn the spatio-temporal correlations between eye gaze and human movements and to fuse them into cross-modal gaze-motion features. These cross-modal features are injected into a noise prediction network via a cross-attention mechanism and guide the progressive denoising that generates realistic human full-body motions. Experimental results on the MoGaze and GIMO datasets demonstrate that our method outperforms state-of-the-art methods by a large margin in terms of average displacement error (15.03% on MoGaze and 9.20% on GIMO). We further conducted an online user study to compare our method with state-of-the-art methods, and the responses from 23 participants validate that the motions generated by our method are more realistic than those from other methods. Taken together, our work makes a first important step towards gaze-guided stochastic human motion prediction and guides future work on this important topic in VR research.

Links

Paper: yan23_arxiv.pdf

Paper Access: https://arxiv.org/abs/2312.12090

BibTeX

@techreport{yan23_arxiv,
  author = {Yan, Haodong and Hu, Zhiming and Schmitt, Syn and Bulling, Andreas},
  title = {GazeMoDiff: Gaze-guided Diffusion Model for Stochastic Human Motion Prediction},
  year = {2023},
  pages = {1--10},
  url = {https://arxiv.org/abs/2312.12090}
}
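The abstract reports its gains in average displacement error (ADE). As an illustration of how such a metric and a relative improvement are typically computed, here is a minimal Python sketch. It assumes the best-of-K convention common in stochastic human motion prediction (the ADE of the closest of K generated samples, with per-frame Euclidean distances over flattened poses averaged across future frames), and it assumes the quoted percentages are relative error reductions over a baseline. The exact evaluation protocol of GazeMoDiff (joint set, number of samples, units) is not stated on this page. The function names `ade`, `best_of_k_ade` and `relative_improvement` are hypothetical, and the numbers are made up.

```python
import math
from typing import Sequence

# Hypothetical helpers, not the authors' evaluation code.
# A pose is one frame's joint coordinates flattened into a single vector
# (e.g. x, y, z per joint); a trajectory is one pose per future frame.
Pose = Sequence[float]
Trajectory = Sequence[Pose]


def ade(pred: Trajectory, gt: Trajectory) -> float:
    """Mean per-frame Euclidean distance between a prediction and the ground truth."""
    if not gt or len(pred) != len(gt):
        raise ValueError("trajectories must be non-empty and have equal length")
    # math.dist raises ValueError if two poses have different dimensions.
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def best_of_k_ade(samples: Sequence[Trajectory], gt: Trajectory) -> float:
    """Stochastic ADE (assumed best-of-K): the lowest ADE among K generated samples."""
    return min(ade(s, gt) for s in samples)


def relative_improvement(baseline: float, ours: float) -> float:
    """Percentage reduction of `ours` relative to `baseline` (lower error is better)."""
    return (baseline - ours) / baseline * 100.0


# Toy example with made-up values: two 2-frame samples of a 2-D "pose".
gt = [[0.0, 0.0], [1.0, 0.0]]
samples = [
    [[0.0, 0.0], [1.0, 2.0]],  # ADE = (0 + 2) / 2 = 1.0
    [[0.0, 1.0], [1.0, 0.0]],  # ADE = (1 + 0) / 2 = 0.5
]
print(best_of_k_ade(samples, gt))      # 0.5
print(relative_improvement(2.0, 1.5))  # 25.0
```

Taking the minimum over samples rewards a stochastic model for covering the true future with at least one of its K predictions, rather than penalising it for the diversity of the others. Under the assumption above, a figure such as 15.03% would be read as `relative_improvement(baseline_ade, method_ade)`.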