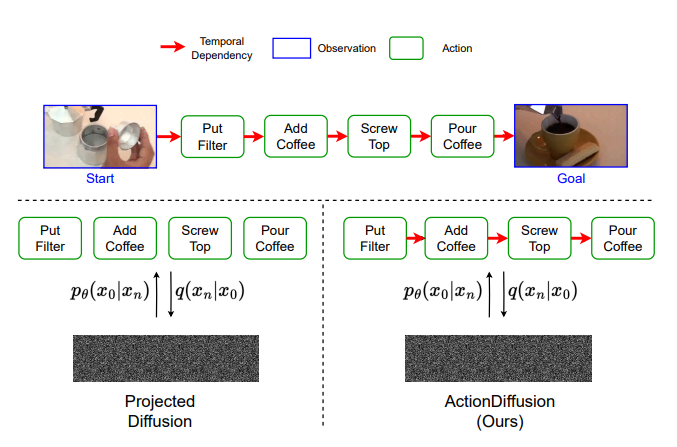

ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos

Lei Shi, Paul Burkner, Andreas Bulling

arXiv:2403.08591, pp. 1–6, 2024.

Abstract

We present ActionDiffusion – a novel diffusion model for procedure planning in instructional videos that is the first to take temporal inter-dependencies between actions into account in a diffusion model for procedure planning. This approach is in stark contrast to existing methods that fail to exploit the rich information content available in the particular order in which actions are performed. Our method unifies the learning of temporal dependencies between actions and denoising of the action plan in the diffusion process by projecting the action information into the noise space. This is achieved 1) by adding action embeddings in the noise masks in the noise-adding phase and 2) by introducing an attention mechanism in the noise prediction network to learn the correlations between different action steps. We report extensive experiments on three instructional video benchmark datasets (CrossTask, Coin, and NIV) and show that our method outperforms previous state-of-the-art methods on all metrics on CrossTask and NIV and on all metrics except accuracy on the Coin dataset. We show that by adding action embeddings into the noise mask, the diffusion model can better learn temporal dependencies between actions and improve performance on procedure planning.

Links

Paper Access: https://arxiv.org/abs/2403.08591

BibTeX

@techreport{shi24_arxiv,
  title = {ActionDiffusion: An Action-aware Diffusion Model for Procedure Planning in Instructional Videos},
  author = {Shi, Lei and Burkner, Paul and Bulling, Andreas},
  year = {2024},
  pages = {1--6},
  url = {https://arxiv.org/abs/2403.08591}
}