Pose2Gaze: Generating Realistic Human Gaze Behaviour from Full-body Poses using an Eye-body Coordination Model

Zhiming Hu, Jiahui Xu, Syn Schmitt, Andreas Bulling

arXiv:2312.12042, pp. 1–10, 2023.

Abstract

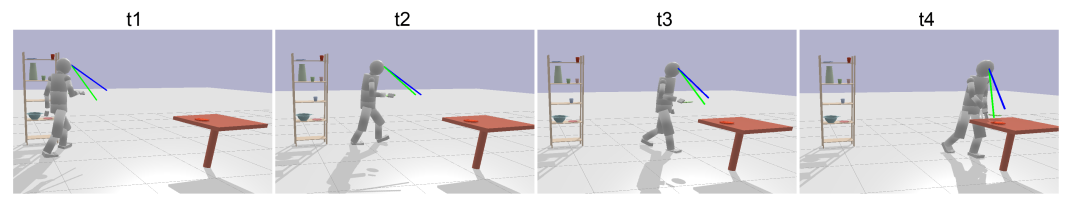

While generating realistic body movements, e.g., for avatars in virtual reality, is widely studied in computer vision and graphics, the generation of eye movements that exhibit realistic coordination with the body remains under-explored. We first report a comprehensive analysis of the coordination of human eye and full-body movements during everyday activities based on data from the MoGaze and GIMO datasets. We show that eye gaze is strongly correlated with both head directions and full-body motions, and that there is a noticeable time delay between body and eye movements. Inspired by these analyses, we then present Pose2Gaze, a novel eye-body coordination model that first uses a convolutional neural network and a spatio-temporal graph convolutional neural network to extract features from head directions and full-body poses, respectively, and then applies a convolutional neural network to generate realistic eye movements. We compare our method with state-of-the-art methods that predict eye gaze only from head movements on three different generation tasks and demonstrate that Pose2Gaze significantly outperforms these baselines on both datasets, with average improvements in mean angular error of 26.4% and 21.6%, respectively. Our findings underline the significant potential of cross-modal human gaze behaviour analysis and modelling.

Links

Paper: hu23_arxiv.pdf

Paper Access: https://arxiv.org/abs/2312.12042

BibTeX

@techreport{hu23_arxiv,
  author = {Hu, Zhiming and Xu, Jiahui and Schmitt, Syn and Bulling, Andreas},
  title = {Pose2Gaze: Generating Realistic Human Gaze Behaviour from Full-body Poses using an Eye-body Coordination Model},
  year = {2023},
  pages = {1--10},
  url = {https://arxiv.org/abs/2312.12042}
}
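The abstract reports results as mean angular error. The sketch below shows one common way to compute that metric. It is not the authors' evaluation code, and it assumes that each gaze sample is a 3D direction vector and that errors are averaged uniformly over samples. The function names (`angular_error_deg`, `mean_angular_error_deg`, `relative_improvement`) are hypothetical. The paper's exact protocol, for example how errors are averaged across tasks or baselines, may differ.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def angular_error_deg(pred: Vec3, true: Vec3) -> float:
    """Angle in degrees between a predicted and a ground-truth 3D gaze direction."""
    dot = sum(p * t for p, t in zip(pred, true))
    norm_p = math.sqrt(sum(p * p for p in pred))
    norm_t = math.sqrt(sum(t * t for t in true))
    # Normalise, then clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_sim = max(-1.0, min(1.0, dot / (norm_p * norm_t)))
    return math.degrees(math.acos(cos_sim))


def mean_angular_error_deg(preds: Sequence[Vec3], trues: Sequence[Vec3]) -> float:
    """Average angular error over paired samples (assumed uniform weighting)."""
    if len(preds) != len(trues) or len(preds) == 0:
        raise ValueError("need equally many, non-empty predictions and ground truths")
    return sum(angular_error_deg(p, t) for p, t in zip(preds, trues)) / len(preds)


def relative_improvement(baseline_err: float, method_err: float) -> float:
    """Percentage reduction in error relative to a baseline (hypothetical helper)."""
    return 100.0 * (baseline_err - method_err) / baseline_err
```

For example, `mean_angular_error_deg([(0, 0, 1), (1, 0, 0)], [(0, 0, 2), (0, 1, 0)])` averages errors of 0° and 90° to give 45°. This assumes "improvement" means relative error reduction. Under that assumption, a reported 26.4% improvement would mean the method's mean angular error is 26.4% lower than the baseline's.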